Prepare, Don’t Panic:

Synthetic Media and Deepfakes

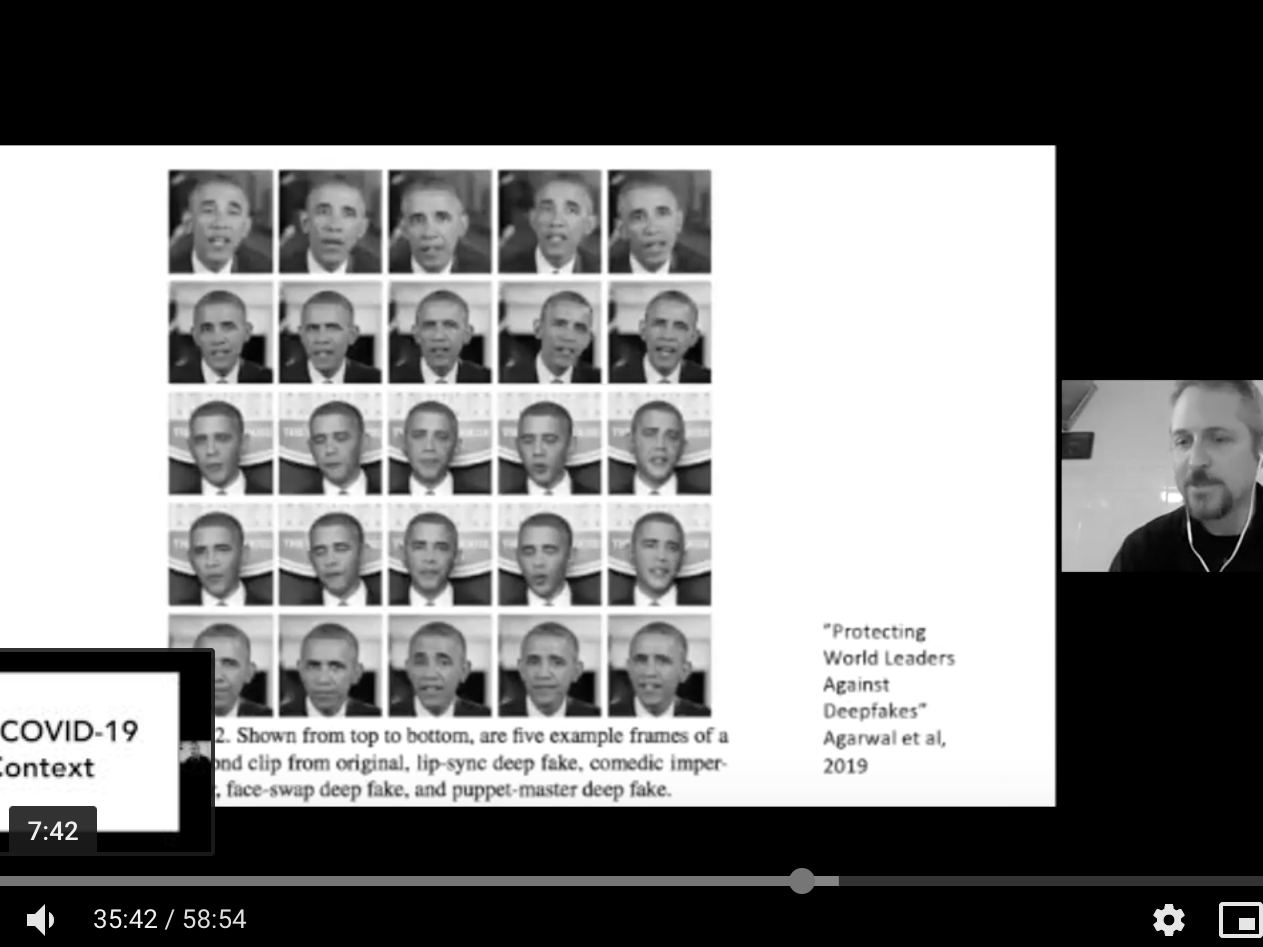

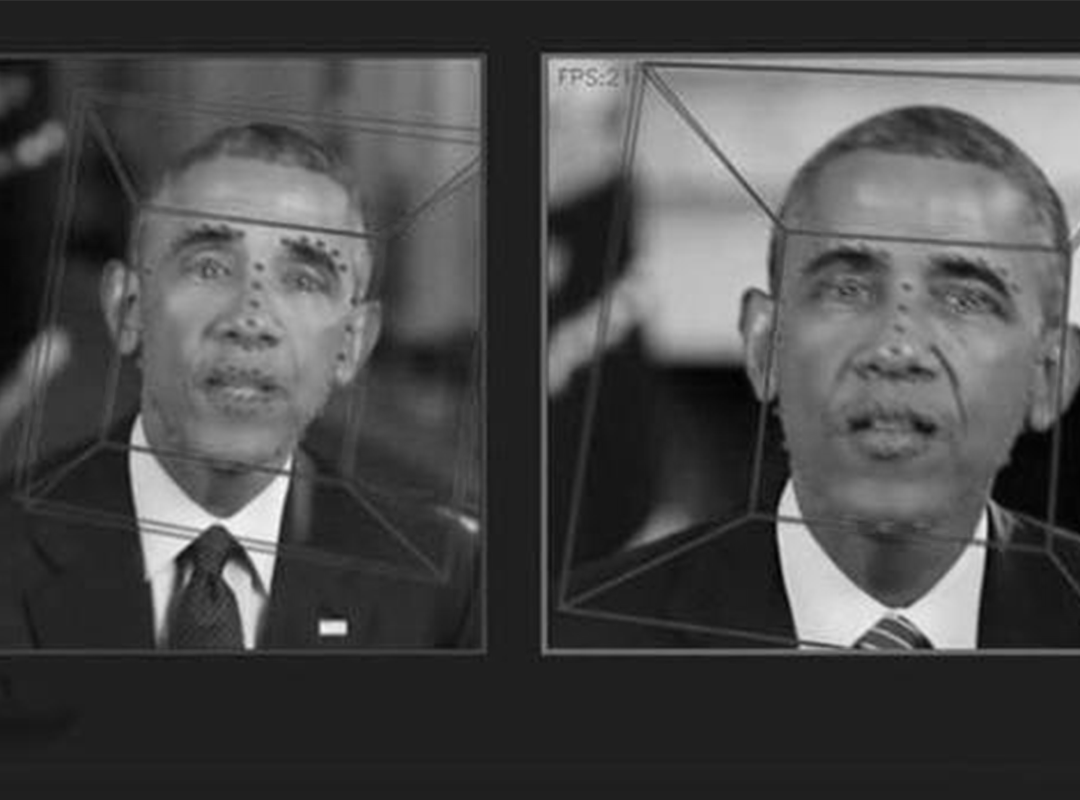

This project focuses on the emerging and potential malicious uses of so-called “deepfakes” and other forms of AI-generated “synthetic media”, and on how we push back to defend evidence, the truth, and freedom of expression from a global, human rights-led perspective. This work is embedded in a broader initiative focused on proactive approaches to protecting and upholding marginal voices and human rights as emerging technologies such as AI intersect with the pressures of disinformation, media manipulation, and rising authoritarianism.

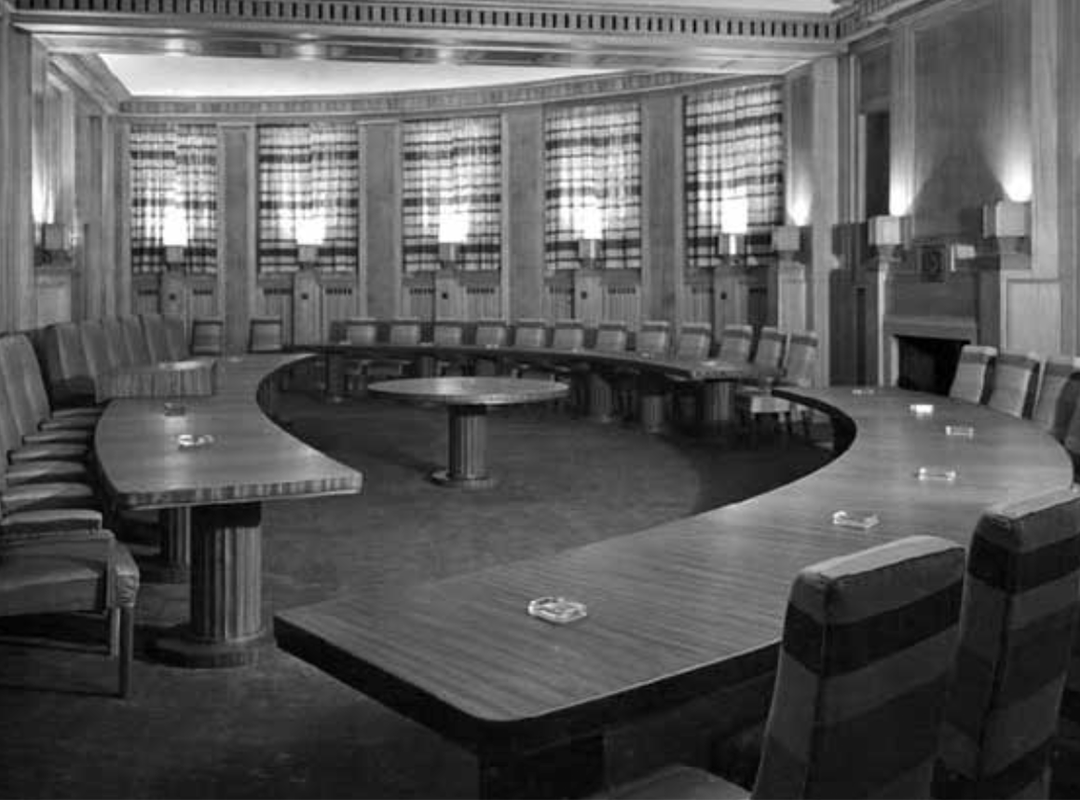

Our work launched in 2018 with the first multi-disciplinary convening on deepfakes preparedness. Check out the report based on the “Mal-uses of AI-generated Synthetic Media and Deepfakes: Pragmatic Solutions Discovery Convening”. For further reports from our series of global meetings, please see below.

Twelve things we can do now to prepare for deepfakes

1

De-escalate rhetoric

and recognize that this is an evolution, not a rupture of existing problems – and that our words create many of the harms we fear.

2

Name and address existing harms

from gender-based violence and cyber bullying.

3

Inclusion and human rights

Demand that responses reflect, and are shaped by, a global and inclusive approach, as well as a shared human rights vision.

4

Global threat models

Identify threat models and desired solutions from a global perspective.

5

Building on existing expertise

Promote cross-disciplinary and multiple solution approaches, building on existing expertise in misinformation, fact-checking, and OSINT.

6

Connective tissue

Empower key frontline actors like media and civil liberties groups to better understand the threat and connect to other stakeholders/experts.

7

Coordination

Identify appropriate coordination mechanisms between civil society, media, and technology platforms around the use of synthetic media.

8

Research

Support research into how to communicate ‘invisible-to-the-eye’ video manipulation and simulation to the public.

9

Platform and tool-maker responsibility

Determine what we want and don’t want from platforms and companies commercializing tools or acting as channels for distribution, including in terms of authentication tools, manipulation detection tools, and content moderation based on what platforms find.

10

Equity in detection access

Prioritize global equity in access to detection systems and advocate that investment in detection matches investment in synthetic media creation approaches.

11

Shape debate on infrastructure choices

and understand the pros and cons of who globally will be included, excluded, censored, silenced, and empowered by the choices we make on authenticity or content moderation, and the infrastructure we build for this.

12

Promote ethical standards

on usage in political and civil society campaigning.

Featured reports and blogs

Reports from the only Global South-focused and global meetings on threats and solution prioritization

Intervening with research and advocacy to ensure emerging authenticity infrastructure reflects key global dilemmas

- Pre-Empting a Crisis: Deepfake Detection Skills + Global Access to Media Forensics Tools

- Ticks or It Didn’t Happen Report: Confronting Key Dilemmas in Authenticity Infrastructure for Multimedia

- Tracing Trust: Why We Must Build Authenticity Infrastructure That Works for All

- The Adobe Content Authenticity Initiative approach to authenticity infrastructure against media manipulation

- Tracing Trust video series

- “Ticks or It Didn’t Happen”: Ensuring emerging authenticity infrastructure helps, not hurts global human rights and free expression (video)

Op-eds on key issues

Resources & analysis

WIRED: HOW DEEPFAKE FEARS UNDERMINE TRUE VIDEO | OP-ED

In WIRED, why increasing conspiratorial thinking on videos (“it’s a deepfake”, “it’s manipulated”) compromises critical truthful videos showing realities in countries like Myanmar. We need different responses to engage with video/photo manipulation, based on what WITNESS heard in global research.

DEEPFAKES PREPARE NOW: REPORT FROM 1st SOUTHEAST ASIA EXPERT MEETING | REPORT

MANIPULATED MEDIA DETECTION: PRIORITIES | VIDEO

#TRACINGTRUST VIDEO SERIES | VIDEO

BACKGROUNDER: DEEPFAKES IN 2021 | BLOG POST

SOUTH AFRICA DEEPFAKES WORKSHOP: FULL REPORT | REPORT

HOW EDUCATIONAL INITIATIVES CAN FIGHT DISINFO | VIDEO

TWITTER RELEASED A DRAFT POLICY ON SYNTHETIC MEDIA. HERE'S WHAT STOOD OUT TO ACTIVISTS. | BLOG POST

TO FIGHT DEEPFAKES BUILD MEDIA LITERACY, SAY AFRICAN ACTIVISTS | BLOG POST

PREPARING FOR DEEPFAKES AGAINST JOURNALISM | CASE STUDY

The European Broadcasting Union published its annual report on news trends, which includes our case study on deepfakes and journalism. The case study also includes a short video (requires login for viewing).

DEEPFAKES: PREPARE NOW (PERSPECTIVES FROM BRAZIL) | REPORT

DEEPFAKES AND SYNTHETIC MEDIA: UPDATED SURVEY OF SOLUTIONS AGAINST MALICIOUS USAGES | BLOG POST

PREPARE, DON'T PANIC: DEALING WITH DEEPFAKES AND OTHER SYNTHETIC MEDIA | VIDEO

HEARD ABOUT DEEPFAKES? DON'T PANIC. PREPARE | BLOG POST

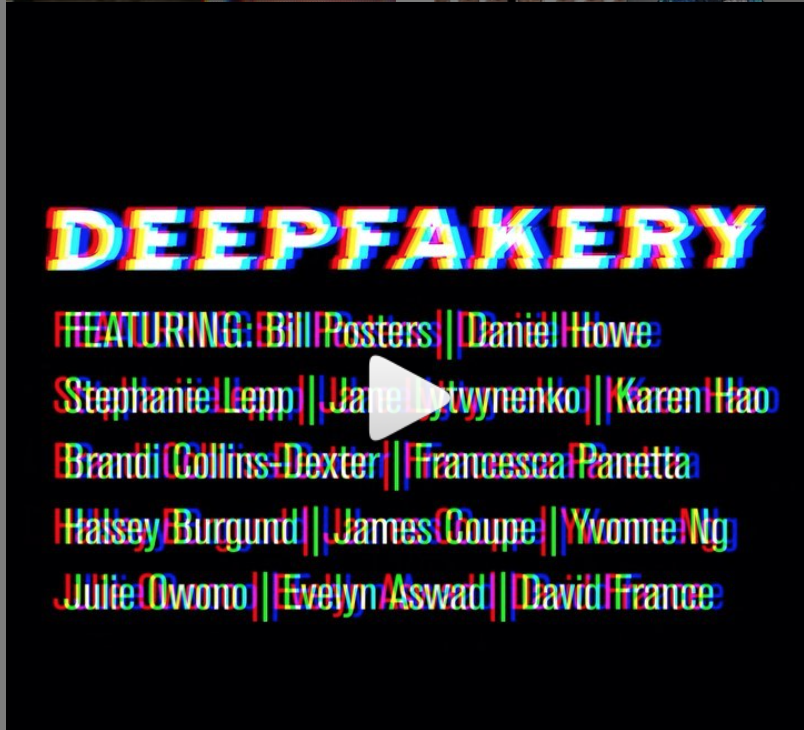

DEEPFAKERY: SATIRE, HUMAN RIGHTS, ART AND JOURNALISM IN A TIME OF INFODEMICS | VIDEO TALK SERIES

ASSESSING THE ADOBE CONTENT AUTHENTICITY INITIATIVE | BLOG

DATA JOURNALISM HANDBOOK: THINKING ABOUT DEEPFAKES | BOOK CHAPTER

ENSURING AUTHENTICITY INFRASTRUCTURE HELPS, NOT HURTS | VIDEO

WHY WE MUST BUILD AUTHENTICITY INFRASTRUCTURE THAT WORKS FOR ALL | BLOG POST

TALK: A.I. MIS/DISINFORMATION – DON'T PANIC, PREPARE | VIDEO

CORONAVIRUS AND HUMAN RIGHTS: PREPARING WITNESS'S RESPONSE | BLOG POST

IN AFRICA, FEAR OF STATE VIOLENCE INFORMS DEEPFAKE THREAT | BLOG POST

HOW DO WE WORK TOGETHER TO DETECT AI-MANIPULATED MEDIA? | REPORT

A HORA E A VEZ DAS DEEPFAKES NO BRASIL E NO MUNDO | OP-ED

AI TALK: DETECTING DEEPFAKES | VIDEO

SXSW 2019 - DEEPFAKES: WHAT WE SHOULD FEAR, WHAT CAN WE DO | AUDIO

DEEPFAKES AND SYNTHETIC MEDIA: WHAT SHOULD WE FEAR? WHAT CAN WE DO? | BLOG POST

“MAL-USES OF AI-GENERATED SYNTHETIC MEDIA + DEEPFAKES: PRAGMATIC SOLUTIONS DISCOVERY CONVENING” | REPORT

WITNESS LEADS CONVENING ON PROACTIVE SOLUTIONS TO MAL-USES OF DEEPFAKES AND OTHER AI-GENERATED SYNTHETIC MEDIA | NEWS

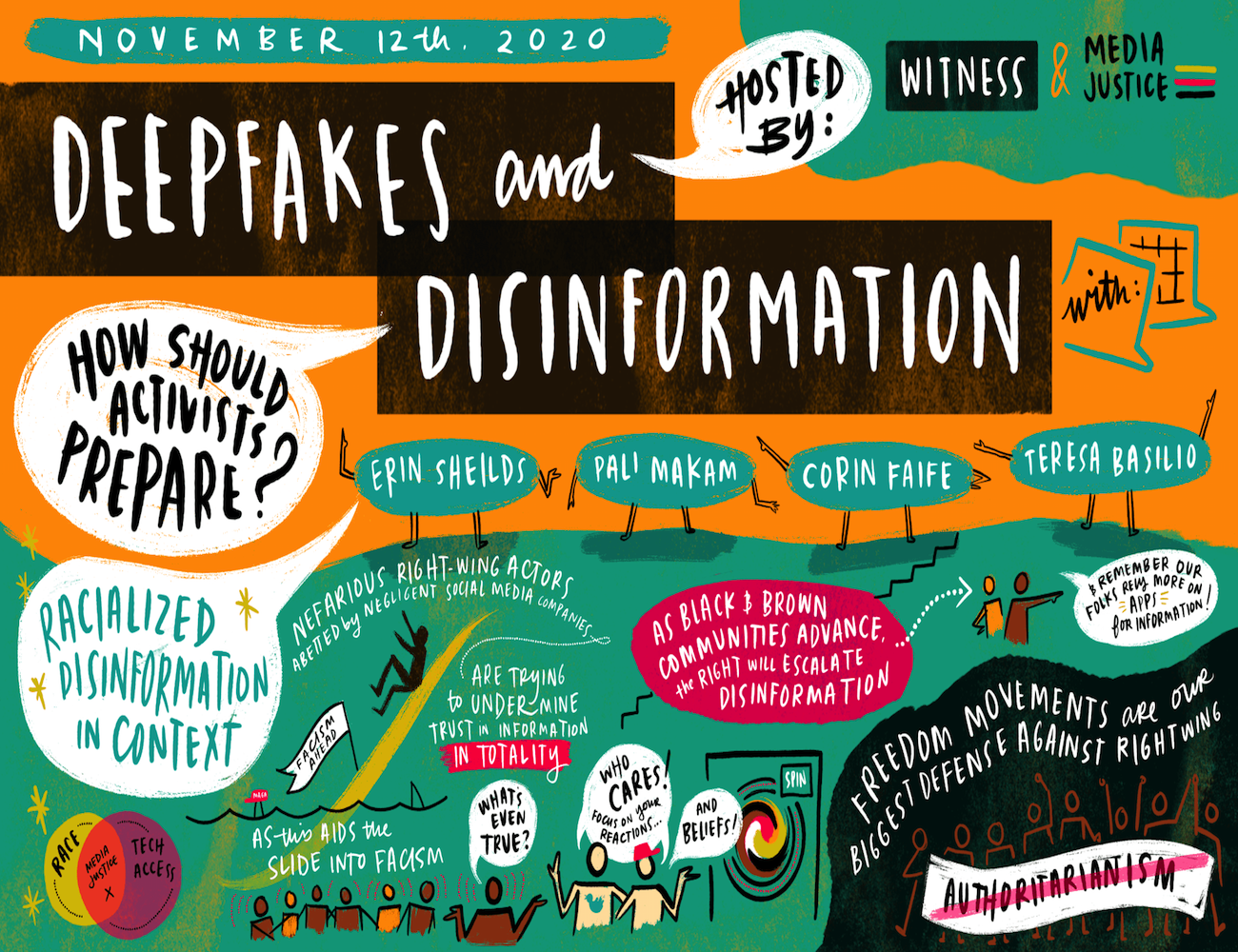

HOW CAN US ACTIVISTS CONFRONT DEEPFAKES AND VISUAL DISINFORMATION? | WORKSHOP

CONTENT AUTHENTICITY INITIATIVE WHITE PAPER: WITNESS CO-AUTHORS | WHITE PAPER LAUNCH

PREPARING FOR DEEPFAKES: RUSSIAN TRANSLATED WEBINAR | VIDEO

CONVERSATIONS WITH DATA: DETECTING DEEPFAKES | PODCAST

WHAT'S NEEDED IN DEEPFAKES DETECTION | BLOG POST

LIVESTREAM Q&A: CONTENT AUTHENTICITY EXPLAINED | VIDEO

THE PROS AND CONS OF FACEBOOK'S NEW DEEPFAKES POLICY | BLOG POST

MAJOR BRAZILIAN PRESS COVERS WITNESS RECOMMENDATIONS ON HOW TO PREPARE BETTER BASED ON RECENT EXPERT MEETINGS | PRESS

Articles in Estadão and Folha de S.Paulo. View image of Folha de S.Paulo piece. For more information on our press coverage in Brazil, click here.

GOVERNING DEEPFAKES DETECTION TO ENSURE SUPPORTS GLOBAL NEEDS | NEWS

PROTECTING PUBLIC DISCOURSE FROM AI-GENERATED MIS/DISINFORMATION | BLOG POST

DEEPFAKES WILL CHALLENGE PUBLIC TRUST IN WHAT’S REAL. HERE’S HOW TO DEFUSE THEM. | OP-ED

DEEPFAKES AND SYNTHETIC MEDIA: SURVEY OF SOLUTIONS AGAINST MALICIOUS USAGES | BLOG POST

In the news…

- WIRED Op-Ed: “Authoritarian Regimes Could Exploit Cries of ‘Deepfake’”

- An unintended consequence: can deepfakes kill video evidence? (CyberNews)

- Cybernews: ‘Kill, laugh, love: what should we do with deepfakes’

- WITNESS was widely quoted on the mistaken emphasis of the ‘alternative Xmas’ message from Channel 4, a national channel in the UK, which involved a deepfake of the Queen. Coverage included the Guardian, CNN, Forbes, and Business Insider

- WITNESS cited as resource on SIGGRAPH podcast on deepfakes

- Are deepfakes breaking our grip on reality?, Al-Jazeera The Stream

- Deepfake bot on Telegram is violating women by forging nudes from regular pics, CNET highlights WITNESS point of view

- New Photoshop ‘Content Attribution’ Tool Tracks Edit History and Prevents Photo Theft, PetaPixel notes WITNESS involvement

- Qualcomm announces photo verification tool, NBC News

- WITNESS quoted in Boston Globe OpEd “We made a realistic deepfake, and here’s why we’re worried”

- Immerse covered the launch of the Deepfakery series

- Wired, The Verge, and TechCrunch articles on CAI highlight values we pushed for, as does the Adobe launch blog

- The Verge references WITNESS and Guardian Project in relation to CAI, “Some Photoshop users can try Adobe’s anti-misinformation system later this year”

- PhotoFocus highlights human rights workflow integrated into Adobe CAI, “Adobe releases further details of the Content Authenticity Initiative”

- CAI Achieves Milestone: White Paper Sets the Standard for Content Attribution, Adobe Blog, WITNESS role in setting white paper standards highlighted

- WITNESS spoke to Agencia Publica (Brazil) about popularization of deepfakes-creating apps in Brazil and key issues with them: “Yes, nós temos deepfake: brasileiros são o 2º maior público de aplicativo que “troca rostos” de políticos e celebridades”

- MIT Sloan Explained references our framing on deepfakes, “Deepfakes, explained”.

- WITNESS spoke to Euronews, “Facebook labels a second manipulated video of Nancy Pelosi as ‘partly false’”.

- Scientific American documentary and accompanying article features WITNESS https://www.scientificamerican.com/article/a-nixon-deepfake-a-moon-disaster-speech-and-an-information-ecosystem-at-risk/

- Craig Silverman interviewed WITNESS on deepfakes for the European Journalism Centre podcast, ‘Conversations with Data’

- Sam Gregory discussed the potential of deepfakes to be used to protect people in relation to a new documentary on LGBTQ people in Chechnya, Vox, ‘How deepfakes could actually do some good: A new documentary highlights how the controversial technology can protect people.’

- Rappler quoted WITNESS in “Beyond ‘false’ labels, experts call for broader access to fact-checking tools”.

- MIT Technology Review quoted WITNESS, in discussing the Facebook et al. Deepfakes Detection Challenge in “Facebook just released a database of 100,000 deepfakes to teach AI how to spot them”, on the necessity of preparing and the current reality of gender-based violence via deepfakes

- WIRED quoted WITNESS in “Trump’s Tweets Force Twitter Into a High-Wire Act”, highlighting the need for clear, principled stands on public figures and for caution about takedowns and removals.

- Harmony Labs Podcast, “The Revolution Will Be Synthesized”

- Knowable Magazine, ‘Synthetic Media: The Real Trouble with Deepfakes’

- Consumer Reports, “Fight Against Coronavirus Misinformation Shows What Big Tech Can Do When It Really Tries”

- The Guardian, “What are deepfakes – and how can you spot them?”

- Quartz, “Facebook’s deepfake ban ignores most visual misinformation”

- The Cube, “Does Facebook’s new policy on ‘deepfake’ videos go far enough?”

- Afternoons with Rob Breakenridge, “US-Iran tensions; Facebook to ban deepfakes; failing grade for CRA”

- Business Insider, “Facebook just banned deepfakes, but the policy has loopholes — and a widely circulated deepfake of Mark Zuckerberg is allowed to stay up”

- WIRED, “Facebook’s Deepfake Ban Is a Solution to a Distant Problem”

- Associated Press, “Facebook bans deepfakes in fight against online manipulation”

- The New York Times, “Facebook Bans Deepfakes in Fight Against Online Manipulation”

- VICE, “Facebook Takes a Stand on Political Deepfakes, a Problem That Doesn’t Exist”

- Cointelegraph, “Deep Truths of Deepfakes — Tech That Can Fool Anyone”

- Cointelegraph, “Blockchain Might Be a Silver Bullet for Fighting Deepfakes”

- Usbek & Rica, « Avec les deepfakes, n’importe qui peut devenir un démon de la manipulation »

- Boston Globe, “Deepfakes are getting better. Should we be worried?”

- European Science-Media Hub, “A scientist’s opinion : Interview with Sam Gregory about Deepfakes”

- European Science-Media Hub, “Deepfakes, shallowfakes and speech synthesis: tackling audiovisual manipulation”

- Euronews, “Do deepfakes have the power to rewrite history?”

- TransAfrica Radio, “AdebayOkeowo And SamGregory – On THE GET UP with T.Y”

- Power 3.0, “Demystifying Deepfakes: A Conversation with Sam Gregory”

- Immerse, “Weapons of Perception”

- WFMJ-TV, “Legislation passed to better spot deepfakes”

- Euronews, “Twitter wants your help to combat deepfakes”

- MIT Technology Review, “The biggest threat of deepfakes isn’t the deepfakes themselves”

- BBC, “Deepfake videos ‘double in nine months’”

- CNN, “The number of deepfake videos online is spiking. Most are porn”

- WIRED, “Prepare for the Deepfake Era of Web Video”

- MIT Technology Review, “Three threats posed by deepfakes that technology won’t solve”

- WIRED, “Even the AI Behind Deepfakes Can’t Save Us From Being Duped”

- Clarín, “Deepfakes: chantajes y mentiras en el videoclub”

- UN Web TV, “Disinformation and the Media – SDG Media Zone, High-Level Week, 74th session of the UN General Assembly (25 September 2019)”

- Het Financieele Dagblad, “Deepfakepionier: ‘We hebben een probleem’”

- Revista G7, “(Des) Inform.AR, Una Conferencia Internacional Sobre Desinformación”

- Euronews, “French charity publishes deepfake of Trump saying ‘AIDS is over'”

- WIRED, “Forget Politics. For Now, Deepfakes Are for Bullies”

- RTÉ Radio 1, “Drivetime”

- MIT Technology Review, “The world’s top deepfake artist is wrestling with the monster he created”

- NewsBusters.org, “Axios: Social Media Firms Might Police Speech to Protect ‘Truth’”

- Axios Future, “1 big thing: Social media and the truth”

- Fortune, “Fighting Deepfakes Gets Real”

- Aftenposten, “Det foregår et kappløp om sannheten, og sannheten henger etter”

- Axios, “A digital breadcrumb trail for deepfakes”

- CNET, “VidCon kicks off with deepfake dilemma as its opening act”

- BBC, “VidCon: Liza Koshy, Joey Graceffa and LD Shadowlady join huge YouTube convention”

- MIT Technology Review, “

- Journalist’s Resource, “Deepfake technology is changing fast — use these 5 resources to keep up”

- CNN, “Baby Elon Musk, rapping Kim Kardashian: Welcome to the world of silly deepfakes”

- The Washington Post, “Deepfakes are dangerous — and they target a huge weakness”

- OpenAI, “The National Security Challenges of Artificial Intelligence, Manipulated Media, and ‘Deep Fakes’”

- CATO Institute, “Artificial Intelligence and Counterterrorism: Possibilities and Limitations”

- Universo Online, “A proliferação de deepfakes é apenas uma questão de tempo”

- The Washington Post, “Top AI researchers race to detect ‘deepfake’ videos: ‘We are outgunned’”

- CNN, “The fight to stay ahead of deepfake videos before the 2020 US election”

- MIT Technology Review, “Deepfakes have got Congress panicking. This is what it needs to do.”

- Fortune, “Deepfake Video of Mark Zuckerberg Goes Viral on Eve of House A.I. Hearing”

- Axios, “1 big thing: Big Tech’s untenable deepfake defense”

- VICE, “There’s No ‘Correct’ Way to Moderate the Nancy Pelosi Video”

- Mozilla Internet Health Report 2019, “‘Deepfakes’ are here, now what?”

- MIT Technology Review, “Deepfakes are solvable—but don’t forget that ‘shallowfakes’ are already pervasive”

- Al Jazeera, The Stream, “Would you be fooled by a deepfake?”

- ABA Journal, “As deepfakes make it harder to discern truth, lawyers can be gatekeepers”

- World Economic Forum, “Heard about deepfakes? Don’t panic. Prepare”

- Gizmodo, “How Archivists Could Stop Deepfakes From Rewriting History”

- National Endowment for Democracy, “The Big Question: How will ‘Deepfakes’ and Emerging Technology Transform Disinformation?”

- Harvard Business Review, “Business in The Age of Computational Propaganda and Deep Fakes”