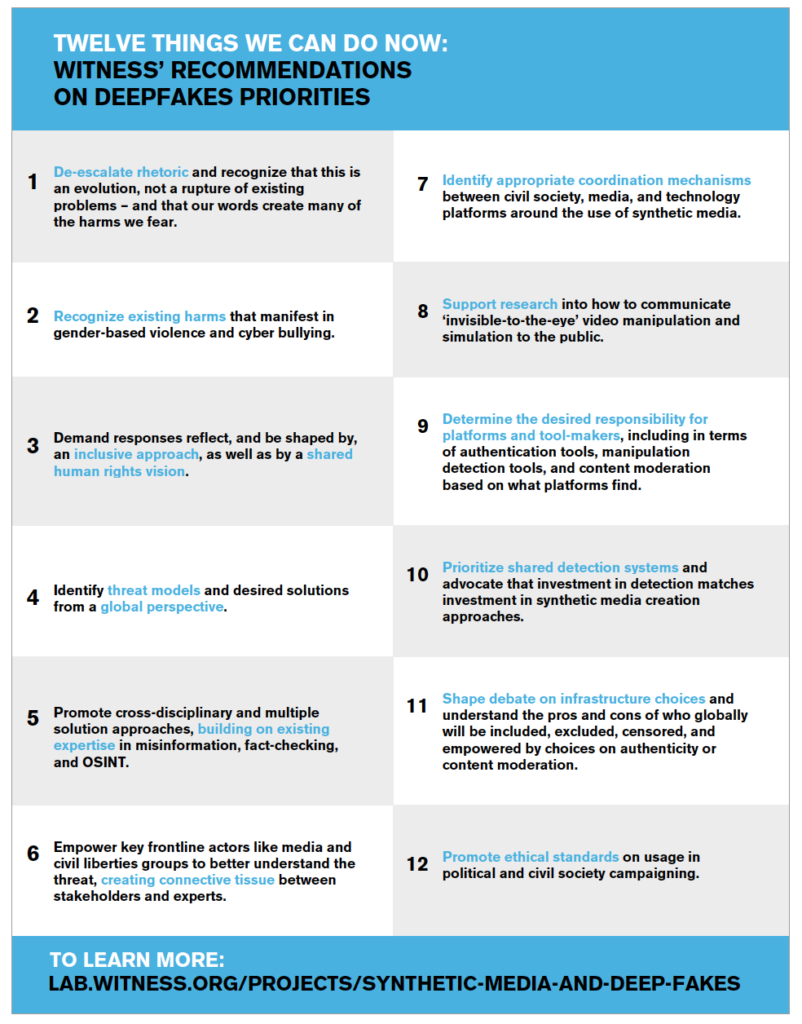

TICKS OR IT DIDN’T HAPPEN*

CONFRONTING KEY DILEMMAS IN AUTHENTICITY INFRASTRUCTURE FOR MULTIMEDIA

*“Ticks” is a British English word for checkmarks.

December 2019

For further information or if you are interested in participating in ongoing work in this area, contact WITNESS Program Director Sam Gregory: sam@witness.org

Dilemma 1

Who might be included and excluded from participating?

Dilemma 2

The tools being built could be used to surveil people

Dilemma 3

Voices could be both chilled and enhanced

Dilemma 4

Visual shortcuts: What happens if the ticks/checkmarks don’t work, or if they work too well?

Dilemma 5

Visual shortcuts: Authenticity infrastructure will both help and hinder access to justice and trust in legal systems

Dilemma 6

Technical restraints might stop these tools from working in places they are needed the most.

Dilemma 7

News outlets face pressure to authenticate media

Dilemma 8

Social media platforms will introduce their own authenticity measures

Dilemma 9

Data storage, access and ownership – who controls what?

Dilemma 10

The technology and science is complex, emerging and, at times, misleading

Dilemma 11

How to decode, understand, and appeal the information being given

Dilemma 12

Those using older or niche hardware might be left behind

Dilemma 13

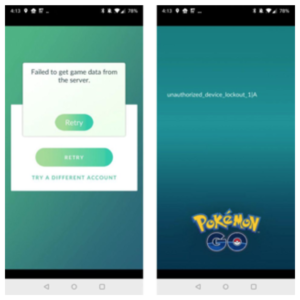

Jailbroken or rooted devices will not be able to capture verifiable audiovisual material

Dilemma 14

If people can no longer be trusted, can blockchain be?

Why WITNESS prepared this report:

A background

WITNESS helps people use video and technology to protect and defend human rights. A key element of this work is ensuring that people have the skills, tools and platforms to enable them to safely and effectively share trustworthy information that contributes to accountability and justice. Both our work on enhancing people’s skills to document video as evidence and our work with the Guardian Project developing tools such as ProofMode are part of this effort. Our tech advocacy toward platforms such as Google and Facebook contributes to ensuring they serve the needs of human rights defenders and marginalized communities globally.

Within our Emerging Threats and Opportunities work, WITNESS is focused on proactive approaches to protecting and upholding marginalized voices, civic journalism, and human rights as emerging technologies such as AI intersect with disinformation, media manipulation, and rising authoritarianism.

The opportunity: In today’s world, digital tools have the potential to increase civic engagement and participation – particularly for marginalized and vulnerable groups – enabling civic witnesses, journalists, and ordinary people to document abuse, speak truth to power, and protect and defend their rights.

The challenge: Bad actors are utilizing the same tools to spread misinformation, identify and silence dissenting voices, disrupt civil society and democracy, perpetuate hate speech, and put individual rights defenders and journalists at risk. AI-generated media in particular has the potential to amplify, expand, and alter existing problems around trust in information, verification of media, and weaponization of online spaces.

New forms of AI-enabled media manipulation:

Over the past 18 months, WITNESS has led a focused initiative to better understand what is needed to prepare for potential threats from deepfakes and other synthetic media. WITNESS has proactively addressed the emerging threat of deepfakes and synthetic media, convening the first cross-disciplinary expert summit to identify solutions in June 2018; leading threat-modelling workshops with stakeholders; publishing analyses and surveys of potential solutions; and pushing the agenda in closed-door meetings with platforms as well as within the US Congress.

Our approach: WITNESS’ Emerging Threats and Opportunities program advocates for strong, rights respecting technology solutions and ensures that marginalized communities are central to critical discussions around the threats and opportunities related to emerging technologies.

WITNESS is connecting researchers and journalists, as well as laying out the map for how human rights defenders and journalists alike can be prepared to respond to deepfakes and other forms of synthetic media manipulation (see our key recommendations below). We have particularly focused on ensuring that all approaches are grounded in existing realities of harms caused by misinformation and disinformation, particularly outside the Global North, and are responsive to what communities want.

WITNESS has emphasized learning from existing experience among journalists and activist communities that deal with verification, trust, and truth, as well as building better collaborations between stakeholders to respond to this issue. The stakeholders include key social media, video-sharing and search platforms, as well as the independent, academic and commercial technologists developing research and products in this area.

WITNESS engages in strategic discussions, planning, and advocacy with a range of actors including tech companies, core researchers, journalists, activists, and underrepresented communities, building on existing expertise to push forward timely, pragmatic solutions. As with all of WITNESS’ work, we are particularly focused on including expertise and measures from a non-U.S./Western perspective, and with a focus on listening to journalists, disinformation experts, human rights defenders, and vulnerable communities in the Global South to avoid repeating mistakes that were made in earlier responses to disinformation crises. This includes a recent first national multidisciplinary convening in Brazil to discuss these issues and prioritize preferred solutions. A comprehensive list of our recommendations and our reporting is available here, and they have been covered in the Washington Post, Folha de São Paulo, CNN, and MIT Technology Review as well as many other outlets. Upcoming expert meetings will bring the prioritization of threats and solutions to Southern Africa and Asia.

WITNESS is also co-chairing the Partnership on AI’s (PAI) Expert Group on Social and Societal Influence, which is focused on the challenges of AI and the media. As part of this, we co-hosted a convening with PAI and BBC in June 2019 about protecting public discourse from AI-generated mis/disinformation, and are participating in the PAI Steering Committee on Media Integrity.

Executive Summary

In late 2013, WITNESS and Guardian Project released a new app called InformaCam. It was designed to help verify videos and images by gathering metadata, tracking image integrity and digitally signing pieces of media taken with the app. Six years later, both the need for and awareness of apps like this have boomed, and InformaCam, now known as ProofMode, has been joined by a host of other apps and tools, often known as ‘verified-at capture’ or ‘controlled capture’ tools. These tools are used by citizen journalists, human rights defenders and journalists to provide valuable signals that help verify the authenticity of their videos.

What we didn’t predict back in 2013 is the increasing weaponization of online social media and calls of “fake news” creating an ever-increasing demand for these types of authentication tools. WITNESS has tracked these developments, and over the past eighteen months has focused on how to better prepare for new forms of misinformation and disinformation such as deepfakes. Now that synthetic media and deepfakes are becoming more common, and the debate on “solutions” is heating up, pressure is mounting for a technical fix to the deepfakes problem, and verified-at-capture technologies, like Guardian Project’s 2013 authentication tools, are being heralded as one of the most viable solutions to help us regain our sense of visual trust. The idea is that if you cannot detect deepfakes, you can, instead, authenticate images, videos and audio recordings at their moment of capture.

When WITNESS hosted the first cross-disciplinary expert convening on responses to malicious deepfakes in June 2018, bringing together a range of key participants from technology, journalism, human rights, cybersecurity and AI research to identify risks and responses, one of the top recommendations of those present was to do focused research to better frame trade-offs and dilemmas in this area as it started to rise to prominence. This report is a direct output of this expert recommendation.

“Baseline research … on the optimal ways to track authenticity, integrity, provenance and digital edits of images, audio and video from capture to sharing to ongoing use. Research should focus on a rights-protecting approach that a) maximizes how many people can access these tools, b) minimizes barriers to entry and potential suppression of free speech without compromising right to privacy and freedom of surveillance c) minimizes risk to vulnerable creators and custody holders and balances these with d) potential feasibility of integrating these approaches in a broader context of platforms, social media and in search engines. This research needs to reflect platform, independent commercial and open-source activist efforts, consider use of blockchain and similar technologies, review precedents (e.g. spam and current anti-disinformation efforts) and identify pros and cons to different approaches as well as the unanticipated risks. WITNESS will lead on supporting this research and sprint.”

The consensus need to focus on this has been validated by the continuing growth of work in this area in the past year, both as a direct solution for deepfakes and also a response to broader ‘information disorder’ issues. Outside of deepfakes, a range of stakeholders are facing pressure to better validate their media, or see competitive advantage at pursuing options in this area – for example, with the recent launch of projects such as the News Provenance Project and the Content Authenticity Initiative from companies like the New York Times, Twitter and Adobe.

At WITNESS, we believe in the capacity of verified-at-capture tools and other tools for tracing authenticity and provenance over time to provide valuable validation of content in a time where challenges to trust are increasing. However, if these solutions are to be widely implemented, in the operating systems and hardware of devices, in social media platforms and within news organizations, they have the potential to change the fabric of how people communicate, inform what media is trusted, and name who gets to decide. This report looks at the challenges, consequences, and dilemmas that might arise if this technology were to become a norm. What seems to be a quick, technical fix to a complex problem could inadvertently increase digital divides, and create a host of other difficult, complex problems.

Robert Chesney and Danielle Citron propose a similar idea for public figures who are nervous they will be harmed by hard-to-detect deepfakes. They could use automatic alibi services to lifelog and record their daily activities to prove where they were and what they were doing at any given time. This approach to capturing additional data about the movements of public figures is not too far beyond adding additional data to each image, video, and audio recording. Both solutions have companies govern large amounts of personal data, encourage the adoption of a disbelief-by-default culture, and enable those with the most power and access to engage with these services.

Within the community of companies developing verified-at-capture tools and technologies, there is a new and growing commitment to the development of shared technical standards. As the concept of more thoroughly tracking provenance gains momentum, it is critical to understand what happens when providing clear provenance becomes an obligation, not a choice; when it becomes more than a signal of potential trust, and confirms actual trust in an information ecosystem. Any discussion of standards – technical or otherwise – must factor in consideration of these technical and socio-political contexts.

This report was written by interviewing many of the key companies working in this space, along with media forensic experts, lawyers, human rights practitioners and information scholars. After providing a brief explanation of how the technology works, this report focuses on 14 dilemmas that touch upon individual, technical and societal concerns around assessing and tracking the authenticity of multimedia. It focuses on the impact, opportunities, and challenges this technology holds for activists, human rights defenders and journalists, as well as the implications for society-at-large if verified-at-capture technology were to be introduced at a larger scale.

Dilemmas 1, 2 and 3 focus on different aspects of participation. Who can participate? How and what are the consequences of both opting in and opting out? If every piece of media is expected to have a tick (or “checkmark” in American English) signaling authenticity, what does it mean for those who cannot or do not want to generate one? Often under other forms of surveillance already, many human rights defenders and citizen journalists documenting abuses within authoritarian regimes might be further compromising their safety when they forfeit their privacy to use this technology so they can meet increased expectations to verify the content they are capturing.

Dilemma 4 looks at visual shortcuts. It could easily be imagined that color systems, tags such as “Disputed” or “Rated False,” or simply a tick/checkmark or a cross that indicates to the user what is “real” or “fake” could be implemented across social media platforms. However, there are various concerns with this approach. In this dilemma we explore issues such as verifying media that is “real” but used in misrepresentative contexts, and visual cues denoting verification that could be taken as signs of endorsement.

In Dilemma 5 we assess the implications that higher expectations of forensic proof might have on legal systems and access to justice in terms of resources, privacy and societal expectations. Visual material is highly-impactful when displayed in courts of law, and in most jurisdictions has a relatively low bar of admissibility in terms of questions around authenticity and verifiability. We must now ask how and if this will change with the actual and perceived increase of synthetic media and other new forms of video and audio falsification, and in what direction it will go, both in courtrooms used to photos and videos as evidence, as well as other judicial systems, where it is as yet novel.

Many of the concerns around deepfakes and synthetic media are focused on scale, in terms of creation and dissemination. It is through the lens of scale that we look at the next four dilemmas, and focus upon how to respond effectively to information disorder and mischaracterized content without amplifying, expanding or altering existing problems around trust in information and the weaponization of online spaces while preserving key values such as open internet, unrestricted access, freedom of expression and privacy.

Dilemma 6 focuses on issues that journalists and citizens who are documenting human rights violations and want to ensure the verifiability of their content might face in making this technology work for them. If those who want or are expected to verify their material face technical challenges that prevent them from using the authenticity tools assessed in this research, how will their material be treated in courts of law, by news outlets, and by social media platforms? This could create a system in which those relying on less sophisticated technology cannot produce images and videos that are accepted as “real.”

The integration of verified-at-capture technology within social media platforms and news outlets is the focus of Dilemmas 7 and 8. Media and news outlets are facing pressure to authenticate media. The rising expectation is that they ensure that both the media they source from strangers as well as the media they produce and publish in-house is resilient to falsifications, and that they assess all user-generated content included in their reporting. Media outlets are concerned not only about their brand and reputation, but also about the larger societal impact this might have around trust in journalism, and the potentially disastrous consequences of reporting that is compromised by misinformation and disinformation. Related sub-challenges include liability concerns and the struggle of smaller platforms to keep up, both of which are explored within Dilemma 7.

Social media and messaging platforms are the key delivery mechanism for manipulated content, and provide a platform for those who want to consume and access such content. It is likely that — due to both external pressures such as regulatory, legislative and liability concerns and changes, and internal pressures, such as maintaining and increasing levels of user engagement — social media platforms, messaging apps and media outlets will introduce their own authenticity multimedia measures and apply more rigorous approaches to tracking provenance. These measures, if introduced, will immediately scale up the perceived need for authenticating multimedia, as well as raise awareness about the risks and harms that could accompany such changes. We discuss these measures in Dilemma 8.

The remainder of the dilemmas laid out in this paper focus specifically on how the technology works and, in some cases, doesn’t work. Dilemma 9 discusses one of the most critical aspects of this report: how collected data is stored. For many working on sensitive human rights issues, as well as those suspicious of platform surveillance and/or their own governments, how data is being treated, stored, deleted, and accessed, and how future changes will be accounted for, are key considerations. Alongside this, Dilemma 9 considers a number of legal, regulatory and security threats and challenges.

The field of media forensics has only developed over the last two decades, and until recently, was still considered to be a niche field. Media forensics is not only a new field, but a disputed one. In Dilemma 10, we look at a number of complications with the proposed technology, and consider a number of known ways that bad actors could trick the authentication process. Dilemma 11 then focuses on the ability of interested parties to review and, if necessary, appeal decisions and processes made by companies who have a financial interest in keeping these processes hidden. Many of the elements in media forensics are not easily readable to non-experts, and as with other processes, particularly those driven by algorithms, machine learning or AI technologies, there is a critical need for people who are able to scrutinize them for errors and appeal poor decisions. If verified-at-capture technology is to be of use in helping interested members of the public make informed decisions on whether they can trust the media they are viewing, the data it collects must be easily comprehensible.

Both Dilemma 12 and Dilemma 13 address the problem of devices that are not able to use verified-at-capture technologies. The basic underlying technology to create a system or set of systems for image and video authentication is still being developed, and Dilemma 12 discusses how, so far, it does not account for those with limited bandwidth and GPS, or for those using legacy devices. Dilemma 13 looks at those who are using jailbroken and rooted devices, and how this may hinder or even bar their ability to capture verifiable audiovisual material. Many verified-at-capture tools use GPS location as an indicator that a piece of multimedia content is authentic, and need to account for the risk that jailbroken or rooted devices might have a GPS spoofer installed. The authenticity tools also rely on being able to assess the integrity of the device capturing the media, and cannot guarantee the integrity of a jailbroken or rooted device. If the expectation to use these tools in order to produce trustworthy content does scale globally, then it is essential that those who are using altered operating systems on their devices are not automatically discounted.

For the last dilemma, we look to blockchain technologies. Many companies interviewed for this report integrated blockchain technologies into their authentication tools to create a ledger of either the hash or the timestamp, or in some cases, both. People are being asked with increasing frequency to transfer their trust from human networks to technological networks, into tools built and implemented by computer scientists and mathematicians, one of which is blockchain. Dilemma 14 explores how blockchain is being used to verify media, and whether it can be trusted.

This report is by no means inclusive of all the intricacies of verifying media at capture, and as this is a rapidly changing and growing field, it is likely that many of the technicalities discussed within this report will soon change. These technologies are offering options to better prove that a picture, video or audio recording has been taken in a particular location, at a particular time. This technology has the potential to be a tool that helps to create better quality information, better communication, greater trust and a healthier society in our shifting cultural and societal landscape. To do this, verified-at-capture technology needs to be developed in a way that it will be seen as a signal rather than the signal of trust, and that will allow people to choose to opt in or out without prejudice, granting them the option to customize the tools based on their specific needs.

Introduction

In 1980, David Collingridge laid out the following double-bind quandary in his book, The Social Control of Technology, to describe efforts to influence or control the further development of technology:

1. An information problem: Impacts cannot be easily predicted until the technology is extensively developed and widely used.

2. A power problem: Control or change is difficult when the technology has become entrenched.

This quandary became known as the Collingridge Dilemma.

The Collingridge Dilemma goes to the heart of this report. There is a growing sense of urgency around developing technical solutions and infrastructures that can provide definitive answers to whether an image, audio recording or video is “real” or, if not, how it has been manipulated, re-purposed or edited since the moment of capture. Technologies of this type are currently being developed and used for a handful of different purposes, such as verifying insurance claims, authenticating the identity of users on dating sites, and adding additional veracity to newsworthy content captured by citizen journalists. There are indicators that these technologies are about to reach primetime, and if they are widely implemented into social media platforms and news organizations, they have the potential to change the fabric of how people can communicate, to inform what media is trusted, and even endow certain parties with the power to decide what is, or is not, authentic.

This report is designed to review some of the impacts that extensive use of this technology might have in order to potentially avoid Collingridge’s second quandary. We reviewed the technologies being proposed and conducted 21 in-depth interviews with academics, entrepreneurs, companies, experts and practitioners who are researching, developing or using emerging, controlled-capture tools. This report reflects on platform, commercial and open-source activist efforts, considers the use of technologies such as blockchain, and identifies both the opportunities and challenges of different approaches, as well as the unanticipated risks of pursuing new approaches to image and video authentication and provenance. At the heart of this paper are the following questions: Is this the system that we want? And who is it designed for?

In this paper, we discuss and assess the individual, technical and societal concerns around assessing and tracking the authenticity of multimedia. This paper is designed to address a number of dilemmas associated with building and rolling out technical authenticity measures for multimedia content, and encourages a considerate, well thought-out and non-reactive approach. As the Collingridge Dilemma articulates, once these solutions are integrated within the hardware and operating systems of smartphones, social media platforms and news organizations, it becomes difficult, if not impossible, to roll back some of the decisions and the implications they have for society as a whole. To quote Collingridge, “When change is easy, the need for it cannot be foreseen; when the need for change is apparent, change has become expensive, difficult, and time-consuming.”

If these infrastructures are to be prematurely implemented as a quick-fix response to a deepfakes problem that does not yet exist, then the current hype and concerns over deepfakes will have helped forge a future that would not have existed otherwise, in terms of legislative, regulatory or societal changes, introducing a whole host of other complex problems. As written by Robert Chesney and Danielle Citron, “Careful reflection is essential now, before either deepfakes or responsive services get too far ahead of us.” With much of the work WITNESS carries out on deepfakes and synthetic media, the overriding message is one of “Prepare, don’t panic.” If this report were to build on this message, it would say “Prepare, don’t panic, but don’t over prepare, either.”

Overview of current “controlled capture” and “verified-at-capture” tools

In the wake of growing concerns over the spread of deepfakes and synthetic media, controlled-capture tools are not only being proposed by start-ups and companies as a solution to authenticating media, but are also being considered by regulatory bodies, social media platforms, and news outlets as a potential technical fix to a complicated problem. Although most of the tools discussed in this report are currently under development by start-ups and non-profits, and are based on software and apps, these technologies and related technologies of authenticity and verified provenance are also starting to be developed within news and media outlets and by social media companies themselves. Many of the players already established in this space also aspire to integrate their approach into phones and devices at the chip and sensor level, or possibly into the operating system, as well as into social media and audiovisual media sharing platforms.

For this report we spoke to a variety of tool developers and companies at different stages of development of technologies in this area, from those with fully fledged, market-ready tools to those whose tools were still in the planning stage. These tools are typically described as “controlled-capture” or “verified-at-capture” tools. This next section provides an overview of the current technologies being proposed.

The general idea

Image, video and audio recordings each share similar characteristics – a moment of creation, the possibility of edits, and the capacity to be digitally reproduced and shared. In a nutshell, with controlled capture, an image, video or audio recording is cryptographically signed, geotagged, and timestamped. The idea behind verified capture is that in order to verify quickly, consistently and at scale, the applications on offer need to be present at the point of capture. Dozens of checks are performed automatically to make sure that all the data lines up and corroborates, and that whoever is recording the media isn’t attempting to fake the device location and timestamp. The hash the media gets assigned is unique, and is based on the various elements of the pieces of data being generated. If you compare this hash with that of another copy of the image to see whether it is the original, the calculation is rerun, and if any one element (the time, date, location or pixelation of the image) has been changed, then the hashes will not match.
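The comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any tool reviewed in this report is implemented: the function name `capture_hash`, the metadata fields and the placeholder media bytes are all hypothetical, and SHA-256 is assumed as the hash function.

```python
import hashlib
import json


def capture_hash(media_bytes: bytes, metadata: dict) -> str:
    """Hash the media bytes together with the capture metadata (hypothetical scheme)."""
    # Serialize the metadata deterministically so identical inputs always hash the same.
    canonical = json.dumps(metadata, sort_keys=True, separators=(",", ":")).encode("utf-8")
    digest = hashlib.sha256()
    digest.update(hashlib.sha256(media_bytes).digest())
    digest.update(canonical)
    return digest.hexdigest()


# Placeholder values, invented for illustration only.
media = b"\x00\x01\x02 raw pixel data (placeholder)"
meta = {"time": "2019-10-01T12:00:00Z", "lat": 51.5074, "lon": -0.1278}

original = capture_hash(media, meta)
same = capture_hash(media, dict(meta))                  # nothing changed
moved = capture_hash(media, {**meta, "lat": 48.8566})   # location changed
edited = capture_hash(media + b"\xff", meta)            # media bytes changed

print(original == same)    # True: identical inputs give the identical hash
print(original == moved)   # False: one metadata element differs
print(original == edited)  # False: the media itself differs
```

Changing any single input, whether the time, the location or the media bytes, produces a different hash, which is the property the comparison relies on.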

While this is not a bulletproof approach, and is certainly vulnerable to sophisticated attacks, the ultimate goal for many of the tool developers is to add forensic information to this cybersecurity solution, looking at lighting, shadows, reflection, camera noise, and optical aberrations and to deploy increasing levels of computer vision and AI to detect such problems as someone taking a video of an existing video or a photo of an existing photo. This goal, according to media forensics expert Hany Farid1, is years down the line due to the complexity of many of these techniques. In the sections below, we explore some of the elements common to many controlled-capture tools including hashing, signing, use of media forensics and access to the device camera.

Hashing and signing

Hashing and signing are cryptographic techniques.

Hashing is a cryptographic technique that involves applying a mathematical algorithm to produce a fixed-length value that is, for practical purposes, unique to any given set of bytes, such as a photo or video. We use different types of hashing techniques in our everyday interactions online. For example, when you enter your password into a website, the website does not want to store the password itself on its servers, so instead it applies a calculation to it and converts your password into a unique set of characters, which it saves.
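As a rough illustration of the password example, the sketch below stores a hash instead of the password and checks a later login attempt against it. It uses Python's standard `hashlib.pbkdf2_hmac`; the random salt and the iteration count are assumptions added here because they are common practice, not details given in this report, and the function names are hypothetical.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 100_000  # assumed work factor, chosen for illustration


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, derived_hash); only these two values would be stored."""
    if salt is None:
        salt = os.urandom(16)  # random per-user salt
    derived = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, derived


def verify_password(attempt: str, salt: bytes, stored: bytes) -> bool:
    """Re-run the same calculation on the attempt and compare the results."""
    _, derived = hash_password(attempt, salt)
    return hmac.compare_digest(derived, stored)  # constant-time comparison


salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))  # True
print(verify_password("wrong guess", salt, stored))                   # False
```

The site never needs the original password again: it only repeats the calculation on whatever is typed at login and checks whether the result matches what it saved.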

This technique can be similarly used for video, image and audio recordings. As written by James Gong, “like any digital file, a video is communicated to computers in the form of character-based code, which means the source code of a video can be hashed. If I upload a video and store its hash on the blockchain, any subsequent changes to that video file will change the source code, and thus change the hash. Just as a website checks your password hash against the hash it has stored whenever you log in, a video site could check a video’s upload hash against the original to see if it had been modified (if the original was known).” This means that the hash value of the original video can be checked against the value of the video being seen somewhere else, and if the video you are checking has a different hash value, then one of them has been edited. There are other approaches to hashing multimedia content, such as perceptual hashing (a method often used in copyright protection to detect near matches), which includes hashing either every frame of a video or regular intervals of frames, or hashing subsections of an image. These hashing techniques help detect manipulation, such as whether an image was cropped, and help identify and verify subsets of edited footage.2
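The difference between an exact cryptographic hash and a perceptual hash can be shown with a small sketch. The “average hash” below is one simple, well-known perceptual hashing method, used here only as a stand-in; the report does not say which algorithm any given tool uses. The 4x4 grayscale grid is invented test data, and a real implementation would first shrink and grayscale an actual image.

```python
import hashlib
from typing import List

Image = List[List[int]]  # rows of grayscale values, 0-255


def sha256_hex(image: Image) -> str:
    """Exact hash: any change to any pixel changes the result."""
    return hashlib.sha256(bytes(v for row in image for v in row)).hexdigest()


def average_hash(image: Image) -> int:
    """Perceptual hash: one bit per pixel, set if the pixel is brighter than the mean."""
    flat = [v for row in image for v in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for v in flat:
        bits = (bits << 1) | (1 if v > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; a small distance suggests a near match."""
    return bin(a ^ b).count("1")


original = [[10, 20, 30, 40], [50, 60, 70, 80],
            [90, 100, 110, 120], [130, 140, 150, 160]]
brightened = [[v + 10 for v in row] for row in original]  # mild edit
inverted = [[255 - v for v in row] for row in original]   # very different picture

print(sha256_hex(original) == sha256_hex(brightened))                # False
print(hamming(average_hash(original), average_hash(brightened)))     # 0
print(hamming(average_hash(original), average_hash(inverted)))       # 16
```

The exact hash flags the brightened copy as different, while the perceptual hash still treats it as a match and only the inverted image scores as far away.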

Signing, in this context, uses the process of public key encryption, and uses keys that can be linked to a person, device or app, to authenticate who or which device created the file. For instance, to determine the device that a piece of media originated on, someone could compare the PGP identity and device ID. Then, to verify the data integrity, they could compare the PGP signatures with the SHA256 hash.
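To make the hash-then-sign idea concrete, here is a deliberately simplified toy example using textbook RSA in plain Python. It is an assumption-laden sketch, not the PGP workflow mentioned above: the key is built from two small, publicly known primes and there is no padding, so it offers no real security. Production tools would rely on a vetted cryptographic library instead.

```python
import hashlib

# Toy key material: two known Mersenne primes, far too small for real use.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (needs Python 3.8+)


def digest_as_int(data: bytes) -> int:
    """SHA-256 digest of the data, reduced to fit the toy modulus."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N


def sign(data: bytes) -> int:
    """Only the holder of the private exponent D can produce this value."""
    return pow(digest_as_int(data), D, N)


def verify(data: bytes, signature: int) -> bool:
    """Anyone with the public values (N, E) can check the signature."""
    return pow(signature, E, N) == digest_as_int(data)


media = b"placeholder bytes standing in for a photo"
signature = sign(media)

print(verify(media, signature))               # True: file matches its signature
print(verify(media + b" edited", signature))  # False: file changed after signing
```

The signature ties the file's hash to a specific key, so a verifier learns both that the bytes are unchanged and which key (and therefore which person, device or app) vouched for them.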

Media forensics (“looking for the fingerprints of a break-in”)

Many of the approaches involved in verified-at-capture technologies such as hashing and signing derive from cybersecurity practices. However, there are a number of media forensic techniques, such as flat surface detection (used to detect someone taking a video or photo of an existing image), involved in some of the commercial offerings.

When discussing these tools and approaches with Dr. Matthew Stamm, he likened it to looking for fingerprints during a break-in.3 Typically, these approaches are looking at where the multimedia signal comes from, and whether it has been processed or altered, to be able to answer questions about the source, processing history, and authenticity of the media content. This is done through signal processing, such as looking for fingerprints left by a particular type of camera, or fingerprints created by an image editor or image processing algorithm.

Machine learning and deep learning have developed rapidly over the past five years. Where it used to take two to three years to develop a single forgery detector, machines that are fed enough training data can now quickly learn to detect many editing operations at once, leading to a more efficient and robust detection process. Most of this development has been in image and video, although it is increasingly trickling into audio. As Dr. Matthew Stamm notes, many of the deep-learning techniques being developed can easily be transferred over to audio.

Cameras and microphones

Each smartphone camera has a lens, as well as a sensor that sees what the lens sees and turns it into digital data, along with software that takes the data and turns it into an image file. Additionally, smartphones might have multiple microphones.

Having access to both cameras and microphones, app developers can use an API provided by the operating system of a device to create a channel between the camera and microphone hardware and the external software. For example, WhatsApp, owned by Facebook, will use the API provided by the devices’ operating system to allow WhatsApp to talk to the phone’s camera and microphone.

There are two central issues with this system when it comes to authenticating media. The first is that the time lapse created when the operating system’s API communicates with the app, even if it is only a nanosecond, is enough time for an adversary to insert fake content into the app. The second is that some of the apps discussed in this report only capture additional metadata when a user is taking a picture from within the app itself. This means that if you were to accidentally use your smartphone’s camera instead of the app, the image would not be authenticated.

Overview of the tools and applications reviewed

We spoke with seven companies and tool developers creating controlled-capture technology. Some of the tools have been in operation for a number of years, with others just entering the development phase. Below is a list of the tools assessed for this project, along with a short description taken from each tool’s website at the time of this report’s completion (October 2019). This list is by no means inclusive of all the tools being developed, but provides a good representative range.

Commercial offerings:

- Amber Video: “Amber Detect uses signal processing and artificial intelligence to identify maliciously-altered audio and video, such as that of deepfakes, and which is intended to sow disinformation and distrust. Detect is for customers who need to analyze the authenticity of videos, the source of which is unknown. Amber Authenticate fingerprints recordings at source and tracks their provenance using smart contracts, from capture through to playback, even when the video is cut and combined. Authenticate is for multistakeholder situations, such as with governments and parts of the private sector, and creates trustlessness so that no party has to trust each other (or Amber): parties can have unequivocal confidence with an immutable yet transparent blockchain record.”

- eWitness: “How can we protect truth in a world where creating fake media with AI techniques is child’s play? eWitness is a blockchain backed technology that creates islands of trust by establishing the origin and proving the integrity of media captured on smart-phones and cameras. With eWitness, seeing can once again be believing.”

- Serelay: “Serelay Trusted Media Capture enables any mobile device user to capture photos and videos which are inherently verifiable, and any third party that receives them to query authenticity of content and metadata quickly, conclusively and at scale.”

- Truepic: “Truepic is the leading photo and video verification platform. We aim to accelerate business, foster a healthy civil society, and push back against disinformation. We do this by bolstering the value of authentic photos and videos while leading the fight against deceptive ones.”4

Open-source apps:

- ProofMode: “ProofMode is a light, minimal “reboot” of our full-encrypted, verified secure camera app, CameraV. Our hope was to create a lightweight, almost invisible utility that runs all the time on your phone, and automatically embeds data in all photos and videos to serve as extra digital proof for authentication purposes. This data can be easily and widely shared through a “Share Proof” share action.”

- Tella: “Tella is a documentation app for Android. Specifically designed to protect users in repressive environments, it is used by activists, journalists, and civil society groups to document human rights violations, corruption, or electoral fraud. Tella encrypts and hides sensitive material on your device, and quickly deletes it in emergency situations; and groups and organizations can deploy it among their members to collect data for research, advocacy, or legal proceedings.”

Specialized tools:

- eyeWitness to Atrocities: “eyeWitness seeks to bring to justice individuals who commit atrocities by providing human rights defenders, journalists, and ordinary citizens with a mobile app to capture much needed verifiable video and photos of these abuses. eyeWitness then becomes an ongoing advocate for the footage to promote accountability for those who commit the worst international crimes.”

So, what are some of the differences between the tools?

Design

- All except Truepic are currently free tools, or offer a free version.

- Most of the tools work only in English. Some tools are open-source, others are closed-source.

- Most are designed for smartphones and primarily work on Android operating systems. The more established companies have an iOS app.

- One of the tools focuses away from smartphones and more on integrating their technology into bodycams and cameras.

Capture

- All gather GPS location and available network signals such as WiFi, mobile, and IP addresses at point of capture.

- Most gather all available device sensor data such as altitude, the phone’s set country and language preferences, and device information such as the make, model, unique device ID number, and screen size at point of capture. Most tools use some kind of signing technology for media (such as PGP) at the time of capture.

- Most tools generate a SHA256 hash.

- Most use proprietary algorithms to automatically verify photos, videos and audio recordings. Most of the tools do not require mobile data or an internet connection to create digital signatures and gather sensor data.

- Most tools have no noticeable impact on battery life or performance. Some apps will still work on rooted or jailbroken devices, but others will disable the verification and the media will get written to the regular camera.

- Some tools work in the background and add extra data to media captured through the phone’s camera. Other tools only work when the media is captured using the app itself.

- Some of the apps allow users to camouflage the app, picking a name and icon of their choice, such as a calculator, a weather app or a camera app icon.

Sharing and storage

- Some of the tools have a visual interface that offers users details such as the date, time and location of the image if the user selects to share it.

- Some companies and organizations store data only on their servers while others store data only on users’ devices.

- Some tools integrate blockchain technology to create a ledger of the hash, the timestamp, or in some cases, both.

- Two of the apps have an option that wipes all data in the app when a particular button is triggered, after which the app will uninstall itself.

- One of the tools enables users to choose how much specificity of location they want to share, such as within 10 meters, within the city, or no geolocation whatsoever.
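The idea of keeping a ledger of hashes and timestamps, mentioned in the list above, can be illustrated with a hash chain. The sketch below is not a blockchain and does not reflect any tool's implementation: it runs on a single machine with no distribution or consensus, and its function and field names are hypothetical. It only shows why altering an earlier record becomes detectable once each entry includes the hash of the one before it.

```python
import hashlib
import json


def entry_hash(body: dict) -> str:
    """Hash an entry body in a stable (key-sorted) JSON form."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append(ledger: list, media_hash: str, timestamp: str) -> None:
    """Add an entry that commits to the media hash, timestamp and previous entry."""
    previous = ledger[-1]["entry_hash"] if ledger else "0" * 64
    body = {"media_hash": media_hash, "timestamp": timestamp, "previous": previous}
    ledger.append({**body, "entry_hash": entry_hash(body)})


def is_intact(ledger: list) -> bool:
    """Recompute every entry hash and check each link to the previous entry."""
    previous = "0" * 64
    for entry in ledger:
        body = {k: entry[k] for k in ("media_hash", "timestamp", "previous")}
        if entry["previous"] != previous or entry["entry_hash"] != entry_hash(body):
            return False
        previous = entry["entry_hash"]
    return True


ledger = []
append(ledger, hashlib.sha256(b"photo one").hexdigest(), "2019-10-01T12:00:00Z")
append(ledger, hashlib.sha256(b"photo two").hexdigest(), "2019-10-01T12:05:00Z")
intact_before = is_intact(ledger)

# Simulate someone backdating the first record after the fact.
ledger[0]["timestamp"] = "2019-09-30T12:00:00Z"
intact_after = is_intact(ledger)
```

Note that the ledger stores only hashes and timestamps, not the media itself.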

RELATED DILEMMAS

Dilemma 5

Authenticity infrastructure will both help and hinder access to justice and trust in the legal system.

Dilemma 1:

Who might be included and excluded from participating?

“If it weren’t for the people, the god-damn people,” said Finnerty, “always getting tangled up in the machinery. If it weren’t for them, the world would be an engineer’s paradise.”

The aspiration of many of the technical tools analyzed within this report is to become an integral part of the centralized communication infrastructure. Many of their catchy taglines suggest an aspiration to be the technical signal that can determine if an image can be trusted or not: “a truth layer for video;” “fake video solved;” “secure visual proof;” and “restoring visual trust for all.” However, through our interviews with the companies and civil society initiatives, it is clear they are not aiming to set an exclusionary standard in which a user would not be trusted unless they carry out all of the steps in authenticating a piece of media. Rather, they are working to develop a system where people can choose to add more signals to additionally verify their media. This intention, however, might not end up as reality if regulatory bodies or society at large come to expect these technical signals in order to trust visual and audio material.

If every piece of media is expected to have a tick/checkmark signaling authenticity, what does it mean for those who cannot or do not want to generate one? As Zeynep Tufekci wrote in February 2018, “We need to make sure verification is a choice, not an obligation.”

Verification technology can, as Kalev Leetaru wrote for Forbes, “offer a relatively simple and low-cost solution that could be readily integrated into existing digital capture workflows to authenticate video as being fully captured in the ‘real world’ to restore trust.” However, under the digital hood there are questions over whether this seemingly simple technology works as well as people hope it does. Those who use older smartphones, who don’t speak English, or have spotty internet or GPS might not be able to get this technology to work for them. Often the most critical videos, images and audio recordings, which are essential to authenticate, originate in places where circumstances are dangerous and stressful, connectivity is limited, or technology is older or must be hacked in order for it to work.

While these applications and tools can be both useful and necessary for those who want to authenticate their media, it is important to be cautious about implementing a technical structure that reinforces power dynamics that intertwine our ideas about who is considered truthful with who has access to technology. For instance, one of the tools in this industry, Amber Authenticate, works mainly with law enforcement in the United States to integrate their technology within the body cams of police officers. The footage captured by these officers gathers additional signals of trust and hashes the footage directly onto the blockchain.5 However, this results in a police officer having access to technology that would authenticate their claims whereas a protester, for example, would not have access to the same technology, and would therefore be less able to authenticate the media they were collecting. There are not just technical restraints to consider, but also environmental ones. Many of the places and instances that need these tools the most are also stressful and dangerous environments, where images and videos that push back against state sponsored narratives will be less likely to be believed, and more likely to have doubt cast upon them. Those documenting abuses could forget to use a particular app they are “expected” to use, could be unaware that they are capturing something of significance, could use a burner phone, or might avoid using a verified-at-capture app at all because of the danger posed by being caught with such an app on their device.

The experiences of the human rights-focused app Tella illustrate this quandary. In the past, Tella allowed users to capture photos and videos using the default camera app as well as the Tella app. Both capture the additional metadata, but the default camera app stores this metadata unencrypted while the Tella app both stores and encrypts the metadata, hiding the image or video away from the user’s main camera roll. There is a tradeoff here: either the user does not capture any metadata if they accidentally use their default camera app, or they do capture it, but it is stored unencrypted, so is viewable by anyone who gains access to the device. Tella’s partners expressed concern over these security risks, and in response, Tella disabled the functionality that allowed users to capture additional metadata when using their default camera app (while planning to implement a solution to address this).6

In order to avoid a disproportionate “ratchet effect,” whereby the adoption of a new technology raises the bar both technically and practically for people who cannot afford such a risk, it is essential to consider how this technology will protect people in threatening and stressful situations, like dissidents, who may need to hide their identity or revoke information that later puts them in danger. If not, at-risk journalists and activists might not be able to fully participate in this new ecosystem. As Sam Gregory notes, these are the people who have spent decades being told they are “fake news” before that became a buzzword, and now run the risk of these “technologies of truth” being used to delegitimize their work.

QUESTIONS TO CONSIDER

- Tool developers and designers: How to design for those operating under stressful situations?

- Tool developers: How can these tools be used by those with limited access to WiFi and GPS, or those using legacy devices? How can those who are capturing media in these environments be involved and included in the design process?

RELATED DILEMMAS

Dilemma 6

Technical restraints might stop these tools from working in places they are needed the most.

Dilemma 2:

The tools being built could be used to surveil people

In 2017, Reality Winner was arrested on suspicion of leaking information concerning the potential Russian interference in the 2016 United States elections to the news outlet The Intercept. One piece of information that led to her arrest was the printer identification dots on the leaked documents. As documented by Kalev Leetaru, for decades now, “color printers have included each machine’s unique signature in the form of steganographic Machine Identification Codes in every page they print. Also known as “yellow dots,” most color laser printers print a hidden code atop every page that is invisible to the naked eye, but which can be readily decoded on behalf of law enforcement to identify the precise unique machine that produced the page.”

A screenshot of the NSA report with the colors inverted by the blog Errata Sec

Just like these yellow dots identified the printer used to print these leaked documents, helping to identify Winner as the whistleblower, the digital signatures on images, video or audio recordings could be used by governments (repressive and otherwise), companies, law enforcement, or anyone with access to them to surveil, track and detain people of interest.

Many of the companies interviewed said that they are indifferent to identifying users, and only care about authenticating the media they are submitting. Whatever the intention of these companies, there are many actors who would want to know exactly who the photographer was and how they could use this technology to find them. Kalev Leetaru goes on to note that, “The digital signature on a secret video recording a politician accepting a bribe could be used to authenticate the video and prosecute the politician or just as easily could be used by a corrupt police official to trace its source and arrest or execute the videographer.”

Verified-at-capture technology has the very real potential to compromise a user’s privacy through unwarranted surveillance. As these technologies emerge and get mainstreamed, they could be susceptible to malicious use by governments, law enforcement and companies. In an article titled “The Imperfect Truth About Finding Facts in a World of Fakes,” Zeynep Tufekci wrote, “Every verification method carries the threat of surveillance.” Already under other forms of surveillance, human rights defenders and citizen journalists documenting abuses within authoritarian regimes might have to trade off their privacy and safety when using this technology to meet increased expectations to verify the content they are capturing.

The level of surveillance can, in part, be mitigated based on the design of the technology. Some of the companies and tool developers interviewed have taken steps to reduce risks on behalf of their users. For instance, Truepic does not capture the device fingerprint as it does not add much value in terms of authenticating a piece of media, and could become a security risk as it could, in theory, be reverse engineered to identify the phone capturing the image.7

There are also questions as to what happens when users make mistakes by using the app imperfectly or inconsistently. For example, Truepic advises its at-risk users not to take a picture of their face, or the inside of their home. But what if this were to happen? It is then not only those capturing the media that might be at risk, but also those around them who were seen within the photo or video, but did not necessarily consent to being in a video with precise metadata recording their location and time stamping when they were there.

In a September 9, 2018 article by Kalev Leetaru entitled “Why digital signatures won’t prevent deep fakes but will help repressive governments”, Leetaru writes, “The problem is that not only would such signatures not actually accomplish the verification they purport to offer but they would actually become a surveillance state’s dream in allowing security services to pinpoint the source of footage documenting corruption or police brutality.”

Mitigation strategies deployed so far

A number of the companies interviewed for this report have been experimenting with different measures to mitigate surveillance risks and build pseudonymity into the technology’s design, such as by not requiring sign-ins whatsoever, or not requiring a real email address. Recognizing that some of their users might be stopped by law enforcement or military groups and their devices searched, many developers and companies have integrated measures to cosmetically mask and/or hide their app.

As discussed above, Truepic advises that those with security concerns should not take photos inside their home, or of their immediate surroundings, in order to keep their identity anonymous. Users can also set the accuracy of the location they wish to share, from local/exact (within 65 meters), to general/city, or choose to provide no information at all. Truepic’s default setting is private, meaning it is hidden and inaccessible until the user decides to share information. Be Heard, a project founded by SalamaTech, an initiative of SecDev Foundation, created a number of security best practices for Arabic speakers using Truepic. They also advise deleting the app when crossing borders or checkpoints. Users can re-download and log into the application at any time in the future and have their images restored.

Yemeni human rights advocates using the app Tella reported that they “were so scared and frightened that the technology and the metadata would have them identified and located that they turned that off completely.”8 As Tella focuses on modularity, they were able to adjust this feature and noted that “you are talking about contexts where people are completely fearful and we need to respect that and we need to give them the option to take that piece out.”9 ProofMode enables location capturing only if a user turns on the location capturing services. While this approach gives users a clear way to opt in and out of capturing location information, it also requires a certain level of education and clear communication of the options, and does not allow for mistakes as there is no safety net if a user forgets to turn location capturing on or off.10

While anyone can download the eyeWitness to Atrocities app and upload information directly to their servers, the organization behind the app often works directly with organizations and collectives with whom they have established a trusted relationship, and in most instances, with whom they have signed a written agreement. They generally partner with activist collectives, civil society organizations, NGOs and litigation groups, and in many cases travel to the location of the documentation groups that will be using their app. They not only provide the technology, but also help develop documentation plans and offer expertise in using photo and video for accountability purposes. eyeWitness to Atrocities is often introduced to users by a mutual contact, through a secure channel, and from there begins a process of building trust and forming partnerships that can take up to a year. This process may require multiple in-person meetings so eyeWitness understands what the group wants to document and why, the risks they might encounter, and their current capacity as well as the capacity they will need in order to meet their goals.11 If needed, they may work with other partners who possess additional knowledge and litigation counsel.12

Amber takes a novel approach in order to enhance the privacy of both those being filmed and those using their technology. By breaking the recorded video down into segments, Amber allows the user to share only the segment or segments they wish to distribute, rather than the entire video. These segments will be assigned their own cryptographic hash as well as a cryptographic link to the parent recording. For instance, a CCTV camera is recording constantly during a 24-hour period; however, the footage of interest is only 15 minutes in the middle of the recording. Rather than sharing the entire 24 hours of footage, Amber can share just the relevant segment with interested stakeholders. As these segments are cryptographically hashed, the stakeholders will be able to confirm that, apart from a reduction in length, nothing else has been altered from the longer 24-hour parent recording.13
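One way to picture segment-level hashing with a link back to a parent recording is sketched below. This report does not describe Amber's actual scheme, so the manifest structure, function names and byte strings here are hypothetical. In this sketch, the parent hash is computed over the ordered list of segment hashes.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def build_manifest(segments):
    """Hash every segment, then hash the ordered list of segment hashes."""
    segment_hashes = [sha256_hex(s) for s in segments]
    parent_hash = sha256_hex("".join(segment_hashes).encode("ascii"))
    return segment_hashes, parent_hash


def verify_segment(segment, index, segment_hashes, parent_hash) -> bool:
    """Check the manifest against the parent hash, then the segment against the manifest."""
    manifest_ok = sha256_hex("".join(segment_hashes).encode("ascii")) == parent_hash
    return manifest_ok and sha256_hex(segment) == segment_hashes[index]


# Hypothetical stand-ins for consecutive segments of a long recording.
recording = [b"segment-00", b"segment-01", b"segment-02", b"segment-03"]
segment_hashes, parent_hash = build_manifest(recording)

# Only segment 2 is shared, along with the list of hashes and the parent hash.
shared_ok = verify_segment(recording[2], 2, segment_hashes, parent_hash)
altered_ok = verify_segment(b"segment-02-edited", 2, segment_hashes, parent_hash)
```

Under this arrangement a recipient sees only the hashes of the unshared segments, not their content, yet can still confirm that the shared segment belongs to the parent recording and has not been changed.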

Moving forward, companies could build a data escrow function, where basic verification information could be publicly available, or provide public acknowledgement that verification data exists around a particular piece of media. Then, an interested party could request more information that the user can choose to comply with or not. The usefulness of this will depend on how much the users trust the companies offering the service and the security of their archives.

A key question here is whether, and how, users will be able to opt out. Hany Farid likens it to a camera’s flash function, “where you have the option, like turning a flash on and off, to securely record and not securely record.”

The difference with flash is that users are able to visually understand what flash does, why it is important (in order to lighten up a dark scene), and can easily see if it is turned on or off (a bright light will shine or not); there are no great risks if you were to forget to turn the flash on or off. It is not the same for these verified media tools. It is hard to communicate what they do, easy to make mistakes when using them, and there could be serious repercussions from accidentally leaving verified capture turned off or on, resulting in media not having necessary additional data attached to it, or unintentionally adding sensitive information to circulating media.

Social media platforms and privacy

In the fairly contained environment of a smartphone app, these privacy-protecting measures can be reasonably successful. But if these verification technologies become widely integrated within social media platforms, they will become less successful as the amount of data associated with each piece of media expands to include social media profiles. There are real risks when disparate pieces of information combine and take on unintentional significance; this is known as the mosaic effect. If platforms add more metadata to the multimedia being posted on their platforms, they will have to assess how much metadata to publish, and how much risk they are willing to take with people’s privacy and safety. Like the advocates from Yemen, people in high-risk places are aware of the risks involved in sharing information and are reluctant to pick up tools that could become a safety concern.

Currently, social media platforms strip most metadata from the images and videos that are uploaded to their services. They have never been totally transparent as to why, but privacy concerns and liability risks are two decent guesses. We do know, however, that social media companies keep this metadata, as it has been requested by courts of law. If verification technology does get implemented into these platforms, then this potentially-identifying information is not only vulnerable to hacks, but also can be requested by subpoenas or court orders, leading governments or law enforcement to an activist’s location. Aside from hacks and breaches, social media platforms will have to decide what information to provide to users about a “verified” image or video: too much and there will be privacy consequences; too little, and the confirmation provided will be a black box binary decision — “trust” or “don’t trust” ― with no context as to the basis of the verification.

QUESTIONS TO CONSIDER

- Companies: What level of identifying information should users be required to provide?

- Companies: What provisions are built into the design of the tools if someone uses them imperfectly or inconsistently?

- Companies: Can users decide to opt in or out? And is it clear how to do this?

- Companies: Are there modularity options built within the app for users who have privacy concerns and want to opt-out of particular metadata being captured?

- Companies: Who will have access to the data? Who would have third-party access to the data? Can users delete or port their data?

- Companies: What support concerning subpoena or legal threats do you offer your users?

- Companies: What level of corroborating verification data will you provide and what level of explanation as to how a verification confirmation is provided?

RELATED DILEMMAS

Dilemma 3:

Voices could be both chilled and enhanced

Authoritarian leaders are mounting campaigns that target people’s belief that they can tell true from false. “Fake news” is becoming an increasingly common reply to any piece of media or story that those in power dislike, or feel implicates them in wrong-doing, and new media-restrictive laws and regulations, as well as requirements for social media platforms and news organizations, are being proposed in democracies and authoritarian countries alike.

Verified capture is a valuable tool for critical media

In this environment, verified media capture technology can be used to improve the verifiability of important human rights or newsworthy media taken in dangerous and vulnerable situations by providing this essential and sensitive media with additional checks of authenticity. Fifteen-year-old Syrian Muhammad Najem used Truepic to capture a 56-second video in which he asks US President Trump, in English, to send international observers to protect civilians in Idlib province. In this message he states, “I know you hate fake news, that’s why I recorded this authentic and verifiable video message for you.” Muhammad, who has 25,000 followers on Twitter, then included a link in this tweet to the Truepic verification page for his video. Muhammad had received significant pushback on his Twitter account in the past, accusing him of lying about his location, so he began using Truepic to verify where and when he captured the videos he was posting. His video was then trusted and disseminated by mainstream media networks like Al Jazeera.

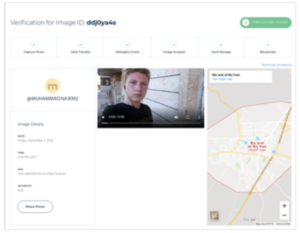

Screenshot from Truepic’s verification page for a video recorded by Muhammad Najem

Another public example is Dimah Mahmoud, who encouraged those documenting any protest, rally or talk in Sudan in January 2019 to do so via Truepic, and included a video in Arabic on how to use the app. WITNESS, in its own work on citizen media as evidence, has consistently seen how ordinary people and activists want to have options for sharing more trustworthy media (as well as options to be anonymous).

Those working to report alternative narratives against repressive governments are often looking to add more information to the media they are capturing to ensure that the evidence they are recording, often in risky situations, is trusted and admissible to courts of law. With the rise of open source investigations and the increased amount of digital evidence being used in courts of law, those capturing the footage want to share content that can be held up to scrutiny.

But those same activists and journalists have safety and privacy concerns

Journalists and activists have legitimate concerns in terms of these tools becoming another form of surveillance. For those who are already distrustful of their governments and of their phones, they may choose not to share the documentation they capture, or not capture important footage at all, if concerned that they might be tracked. When they use the tools inconsistently or imperfectly, then the tools themselves might be weaponized against them. Journalists and activists who do not use these apps for fear of surveillance may find their voices further dismissed.

Those who have their phones checked at military checkpoints or by law enforcement run the risk of these applications being found on their device. This invites questions by authorities concerning the videos and images that have been captured and for what purpose. Many of the human rights-facing apps assessed for this report have taken steps to change the icon, create passwords and store data away from the central camera roll; however, if someone were to dig a little deeper, these apps could be relatively easily detected.

Tools risk being co-opted by social media and “fake news” regulation

There is an increasing global trend of decision makers regulating social media and “fake news.” Under such circumstances, it is easy to find scenarios in which either governments require authenticated media, or platforms themselves default to such regulation. Similarly, increased regulation might be imposed as a requirement for media to be considered a “legitimate” news outlet. For instance, social media journalists and bloggers who reach a certain number of followers in Tanzania must register with the government and pay roughly two million Tanzanian shillings in registration and licensing fees. The Electronic and Postal Communications (Online Content) Regulations that came into effect in 2018 also forbid online content that is indecent, annoying or leads to public disorder. This new regulation has forced many content creators who cannot afford the fees offline.

Similar regulatory abuse and government compulsion around verifiable media could lead to the silencing of dissonant voices or views that could counter government narratives, especially if social media platforms or news outlets are regulated so they can only operate if they introduce this kind of technology. This could lead to companies pulling out of countries that impose such restrictions, preventing independent observers and monitors from operating in such places, and leaving the free press struggling worldwide.

Newsrooms face pressure to “not get it wrong”

It is not only those with privacy concerns that could suffer from this chilling effect, but also news organizations who may be reluctant to take risks or report rapidly on real events for, as Daniel Funke writes, “fear that the evidence of them will turn out to be fake.” News organizations might report on something later proven to be faked, or become the target of a sting, or of a malicious actor trying to spread distrust or cause distress. In their paper Deep Fakes: A Looming Challenge for Privacy, Democracy and National Security, Robert Chesney and Danielle Keats Citron note that “Without a quick and reliable way to authenticate video and audio, the press may find it difficult to fulfill its ethical and moral obligation to spread truth.” Conversely, to protect themselves, news organizations may choose not to use audiovisual material that does not have additional metadata attached.

QUESTIONS TO CONSIDER

- Policy or decision makers: Consider how to ensure that both legal regulations and platform obligations around technical signals for authenticity do not weaponize these signals against journalists and news outlets.

RELATED DILEMMAS

Dilemma 4:

Visual shortcuts: What happens if the ticks/checkmarks don’t work, or if they work too well?

In the wake of concerns around the spread of fake news, a host of mobile applications and web browser extensions have been released, all designed to detect stories that contain falsehoods. In late 2017, the Reporters Lab published a report that found at least “45 fact-checking and falsehood-detecting apps and browser extensions available for download.” Many of the apps analyzed in the Reporters Lab report use a color-coded system to denote the bias of each media source.

A simple color-coded system that indicates whether a piece of media can be trusted is not dissimilar to the outputs of multimedia authenticity apps. A traffic light system that tells the user what is “real” or “fake” could easily be imagined. In addition to color systems, tags such as “Disputed” or “Rated False,” or simply a tick/checkmark or a cross, can be used to indicate trust. While this approach could help regain the general public’s trust in visual and audio material, various concerns arise as internet users increasingly come into contact with such marks.

Media that is “real” but misrepresented

In many misinformation and disinformation campaigns, authentic, untampered video is being used inauthentically. The most common falsified content that WITNESS encounters in its work running video verification and curation projects based on online content is genuine but deliberately mis-contextualized video, which is recycled and used in new contexts. A report published in May 2019 by DEMOS found that “focusing on the distinction between true and false content misses that true facts can be presented in ways which are misleading, or in a context where they will be misinterpreted.” For example, an image of a flooded McDonald’s went viral after Hurricane Sandy hit the US in 2012. This was a real picture, but not one taken during the hurricane; rather, it came from a 2009 art installation called “Flooded McDonald’s” that was miscaptioned and misrepresented.

As Kalev Leetaru writes, “Adding digital signatures to cell phone cameras would do nothing to address this common source of false videographic narrative, since the issue is not whether the footage is real or fake, but rather whether the footage captures the entire situation and whether the description assigned to it represents what the video actually depicts.” This misrepresentative media would be flagged as “real,” which it is, giving media consumers a false sense of confidence that it can be trusted instead of encouraging them to investigate the media further and check whether it is being recontextualized.

Authentication visual shortcuts could be seen as an endorsement

Social media platforms have well-documented issues with their verification programs. Both YouTube and Twitter face a similar issue: the ticks they assign particular accounts are seen as an endorsement rather than a confirmation of a user’s identity. Twitter paused its verification program in 2017 after controversially giving a known white nationalist in the US a blue verification checkmark. In a recent blog post, YouTube announced that it was changing the design of its program: “Currently, verified channels have a checkmark next to their channel name. Through our research, we found that viewers often associated the checkmark with an endorsement of content, not identity. To reduce confusion about what being verified means, we’re introducing a new look that helps distinguish the official channel of the creator, celebrity or brand it represents.” This new look is a gray banner as seen below. It seems likely that social media companies will come across the same issue with authentication checkmarks, where ticks, marks, or gray banners are seen as an endorsement of the content rather than simply a mark indicating that a piece of media has been authenticated.

Ticks could discourage a skeptical mindset

There are two known processes that occur in the brain when making decisions: System 1 and System 2 thinking. These fact-checking apps take advantage of System 1’s fast, often unconscious, decision-making capabilities, as they require little attention. System 2 thinking, in comparison, is slower and controlled, requiring closer scrutiny. You can read more about how System 1 and System 2 thinking work in the context of fake news in this article by Diego Arguedas Ortiz titled “Could this be the cure for fake news?”.

Many of the proposed solutions being discussed in this report tap into System 1 thinking, whereas System 2 thinking would likely be much more effective in these scenarios. This is echoed by various scientific reports finding that, when it comes to debunking information, it’s “useful to get the audience in a skeptical mindset.” These visual shortcuts could become an unnecessary crutch rather than a true aid to someone’s thinking. The requirement that multimedia be captured by using these authenticity apps, or by using a particular technology, could also work towards fostering a culture of disbelief, where human networks of trust are replaced by technical understandings of trust.

The spillover effect

A 2017 study by Dartmouth College, which attempted to measure the effectiveness of general warnings and fact-check tags, found that false headlines were perceived as less accurate when they were accompanied by a warning tag, no matter the respondents’ political views, but that exposure to a general warning also decreased belief in the accuracy of true headlines. This led the study to conclude that there is “the need for further research into how to most effectively counter fake news without distorting belief in true information.”

This study observed a spillover effect where warnings about false news primed people to distrust any articles they saw on social media. This can be interpreted as a “tainted truth” effect, where those who are warned about the influence of misinformation overcorrect for this threat and identify fewer true items than those who were not warned.

Once there is a tick on an image that states a piece of media is “fake,” then what? How would you discourage people from sharing this content? It is critical to address how people understand what it means to have a “fake” tag and, as identified by the WITNESS/Partnership on AI and BBC convening, to place this in the broader context of how and why people share known false information, as well as to prioritize better research on how to communicate falsehood.

If these multimedia authenticity checks are indeed rolled out to be part of messaging apps and large social media platforms, these quick indicators of trust could do the exact opposite of what they were designed to do, and end up both discouraging investigation and scrutiny and bringing accurate information into question. Integrating software to decide whether something is “real” or not is just one method among a variety of approaches, and one that leaves much more to be done in terms of regaining levels of trust beyond technical indicators.

QUESTIONS TO CONSIDER

- Companies: Consider the effects of the warning labels you choose to add to the media.

- Companies and tool developers: How to manage mis-contextualized or mis-framed “real” media?

RELATED DILEMMAS

Dilemma 5:

Visual shortcuts: Authenticity infrastructure will both help and hinder access to justice and trust in legal systems

“If we become unable to discover the truth or even to define it, the practice of law, and functional society, will look much different than they do today.”

On September 21, 2018, a military tribunal in the Democratic Republic of the Congo (DRC) condemned two commanders for crimes against humanity. As part of this trial, videos were used as evidence for the first time ever in the DRC. TRIAL International and WITNESS worked together to train lawyers working on the case in best practices for capturing and preserving video, and worked with eyeWitness to Atrocities to use their eyeWitness app to verify that the footage being captured had not been tampered with.

“During the investigatory missions, information was collected with the eyeWitness app to strengthen evidentiary value of the footage presented in court,” says Wendy Betts, Project Director at eyeWitness to Atrocities. “The app allows photos and videos to be captured with information that can firstly verify when and where the footage was taken, and secondly can confirm that the footage was not altered. The transmission protocols and secure server system set up by eyeWitness creates a chain of custody that allows this information to be presented in court.” “When the footage was shown, the atmosphere in the hearing chamber switched dramatically,” testified Guy Mushiata, DRC human rights coordinator for TRIAL International. “Images are a powerful tool to convey the crimes’ brutality and the level of violence the victims have suffered.”

This is an example of how additional layers of verification and authentication can aid in access to justice. While in some jurisdictions video has been used in trials for years, in others, like the DRC, it is new, and judicial systems are working on developing rules for admissibility.

The rules dictating procedure around the introduction of digital evidence in courts of law, in the US at least, are, as Hany Farid describes, “surprisingly lax.”14 Depending on the type of legal system in place, experts are hired by either the courts or the opposing sides, and must present their arguments, or answer questions posed by the court. In other jurisdictions, the approach differs. On Egypt, Tara Vassefi writes in a report on Video as Evidence in the Middle East and North Africa, “There is a technical committee under the umbrella of the State Television Network, also referred to as the Radio and Television Union (Eittihad El’iidhaeat w Elttlifizyun Almisri), which is responsible for authentication and verification of video evidence. Generally, if the opposing party contests the use/content/authenticity of video evidence, the judge refers the video to this committee for expert evaluation.” Vassefi goes on to note that although Egypt has a committee responsible for authentication and verification of video evidence, the issue is still subject to the discretion of the judge, which means it can be used as a political tool to influence the outcome of a case. In Tunisia, video evidence is used at the discretion of the judge, who can “simply deem the video evidence inadmissible and rely on other forms of evidence.”

Visual material is highly impactful when displayed in courts of law and, in most jurisdictions, has a relatively low bar of admissibility in terms of questions around authenticity and verifiability. Tara Vassefi, in her research into video as evidence in the US, argues that “electronic or digital evidence is currently ‘rarely the subject of legitimate authenticity dispute,’ meaning that legitimate authenticity disputes are not coming to the fore as lawyers and judges are using digital evidence.” We must now ask in what direction the actual and perceived increase of synthetic media and other forms of video and audio falsification will lead courts in determining the authenticity of media, both in courtrooms accustomed to using photos and videos as evidence and in courtrooms where such media is a novelty.

Deepfakes, or synthetic media, could be introduced in courts of law. And even if they are not introduced, their existence means that anyone could stand in a court of law and plausibly deny the authenticity of the media being presented. Other challenges to using video and images as legal evidence may be more mundane, and grounded in increasing skepticism around image integrity. In the high-profile divorce case between the actors Johnny Depp and Amber Heard, Depp is claiming that the photos showing domestic abuse of Heard are fake. “‘Depp denies the claims and images purported to show damage done to their property in an alleged fight contain ‘no metadata’ to confirm when they were taken,’ said his lawyer Adam Waldman.”

Depp’s claim that these images were fake, as they “contain no metadata,” could be an increasingly common argument. In this dilemma, we look at the implications of higher expectations of forensic proof in terms of resources, privacy and societal expectations.

The implications of higher expectations of forensic proof

If societies’ awareness and concerns around synthetic media grow, or if more authentication and verification is required, then there is the potential of what Associate Director of Surveillance and Cybersecurity at Stanford’s Center for Internet and Society, Riana Pfefferkorn, refers to as a new flavor of an old threat, a “reverse CSI effect.”15

The CSI effect takes its name from the popular crime drama television series CSI: Crime Scene Investigation. Mark A. Godsey describes this effect in his article “She Blinded Me with Science: Wrongful Convictions and the ‘Reverse CSI Effect’”: “Jurors today, the theory goes, have become spoiled as a result of the proliferation of these ‘high-tech’ forensic shows, and now unrealistically expect conclusive scientific proof of guilt before they will convict.” In this context, the CSI effect might refer to juries and judges expecting advanced forensic evidence in order to trust any multimedia content being presented to them.