FOR MORE INFORMATION: medialab@witness.org

A Twitter analysis by the WITNESS Media Lab shows how manipulation of image content and context online is used for anti-immigrant propaganda

EXECUTIVE SUMMARY

2018 Google News Initiative Fellow

Our newest project, “Online Manipulation of Visual Content for Anti-Immigrant Propaganda,” analyzes close to a million tweets from a week during the crisis at the US-Mexico border. The project captures how political content, specifically images, gets re-contextualized and leveraged as anti-immigrant propaganda on social media. It was produced by Erin McAweeney as part of the 2018 Google News Fellowship with the WITNESS Media Lab, under the “Eyes On ICE: Documenting Immigration Abuses” project, which examines the challenges and possibilities of using video to document encounters with Immigration and Customs Enforcement in the U.S. in pursuit of justice. The following is a summary of the project and findings. For a more detailed look at the project, please download the full report.

This research is an inquiry into how online activism and images related to immigration justice can be manipulated and used to harass individuals or groups, spread false information, and incite hate. Over the span of a week, we collected and analyzed close to one million tweets related to the ongoing, contentious immigration debate in the United States and identified trends and case studies of image appropriation and re-contextualization patterns on social media. We enriched this analysis through interviews with WITNESS’ immigrant rights partners, which further demonstrate how the fight for social justice has both benefited from social media and been disrupted by digital disinformation.

Amid the evolving mire of online information practices, visual content has surfaced as an exceptionally useful and reliable tool in protecting and defending human rights. Activists and the organizations supporting them, including WITNESS, have long demonstrated the value of visual media in the fight for justice. As access to mobile phones rises and social media plays an increasingly pervasive role in all of our lives, we have seen how media can serve as authentic documentation of violations and a catalyst for social change. These trends have enabled activists to circumvent hierarchical power structures and share the stories of marginalized groups, creating a new era of online human rights activism driven by visual content.

Meanwhile, visual mis- and disinformation, in both context and content, has become exceedingly prevalent online. This includes progressively sophisticated synthetic media, or “deep fakes”, which pose potential social, economic, and political threats (World Economic Forum, 2017). If the authenticity of visual content is called into question, communities that rely on accurate documentation of human rights injustices, such as the ones WITNESS engages with on a daily basis, will find their lives and well-being at risk. While the participatory nature of social media has been proven to help individuals foster political will and organize with a collective purpose, bad actors have increasingly exploited the lower barriers to entry online to propagate new narratives and disinformation surrounding activist-generated visual content. Despite the increasing availability of effective and accessible means of visual content manipulation, journalistic and platform responses to ‘fake news’ issues have predominantly focused on text-based mis/dis/mal-information and text-based responses.

This project studies visual content created and shared by activists online in response to recent United States’ immigration policies and Immigration and Customs Enforcement (ICE) activities. This study intends to map the evolving contextual narratives surrounding visual content online as users, both those in support of and opposed to current immigration policies, negotiate the interpretations and contents of images and videos. The Twitter data poses limitations on the conclusions that can be drawn concerning the qualitative experiences of activists and immigrants using social media, so interviews were conducted to supplement the quantitative analysis. These interviews serve as anecdotal data to enrich the stories told by the tweet data alone.

This research intends to answer the following questions:

- What common trends in conversational patterns on social media can we identify that develop around images and videos in a social movement context?

- What are the more granular patterns in how individual users interact with these images and videos as they deliberate on the content and context of visual content, otherwise known as framing (1)?

- How does misinformation develop and grow from the aforementioned framing negotiations of visual content?

1. Framing: In the study of social movements, framing is a process that enables groups of people to understand, remember, evaluate, and act upon a problem.


925,787 tweets (98%) came from unverified sources, and the remaining 17,513 (2%) came from verified sources (2). Of the verified tweets, 52% were retweets, 9% were quotes, and 39% were original; of the unverified tweets, 81% were retweets, 8% were quotes, and 11% were original. Overall, 22,898 tweets had at least one native piece of visual content (e.g. photo, video, GIF), and the number of tweets decreased with each additional piece of media, up to the maximum of four images allowed per tweet. The visual content comprised 21,036 photos, 1,600 videos, and 262 GIFs. The dataset also contained 80,030 tweets that were retweets of visual content and 57,715 quotes of visual content.
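A breakdown like the one above can be tallied directly from tweet metadata. The following is a minimal sketch, not the study's actual pipeline: it assumes each tweet is a dictionary shaped like Twitter's v1.1 JSON (`retweeted_status`, `is_quote_status`, `user.verified`, `extended_entities.media`), and the helper names and sample data are hypothetical.

```python
from collections import Counter


def tweet_kind(tweet):
    """Classify a tweet as 'retweet', 'quote', or 'original'."""
    # Check for a retweet first: a retweet of a quote tweet also carries
    # is_quote_status, and it should still count as a retweet here.
    if "retweeted_status" in tweet:
        return "retweet"
    if tweet.get("is_quote_status"):
        return "quote"
    return "original"


def media_type(tweet):
    """Return the type of the tweet's native media, or None if it has none."""
    media = tweet.get("extended_entities", {}).get("media", [])
    # Assumption: a tweet holds up to four photos, or one video, or one GIF,
    # so the first item's type describes the whole tweet.
    return media[0]["type"] if media else None


def summarize(tweets):
    """Count tweet kinds per verification group and media types per tweet."""
    kinds, media = Counter(), Counter()
    for tweet in tweets:
        group = "verified" if tweet.get("user", {}).get("verified") else "unverified"
        kinds[(group, tweet_kind(tweet))] += 1
        kind_of_media = media_type(tweet)
        if kind_of_media is not None:
            media[kind_of_media] += 1
    return kinds, media


# Hypothetical sample data, for illustration only.
sample = [
    {"user": {"verified": True},
     "extended_entities": {"media": [{"type": "photo"}, {"type": "photo"}]}},
    {"user": {"verified": False}, "retweeted_status": {}, "is_quote_status": True},
    {"user": {"verified": False}, "is_quote_status": True},
    {"user": {"verified": False},
     "extended_entities": {"media": [{"type": "video"}]}},
]

kinds, media = summarize(sample)
```

Dividing each count in `kinds` by its group total gives the kind of per-group percentages reported above.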

943,300 tweets analyzed

53 pieces of metadata for each tweet (such as tweet and user ID numbers, hashtags and URLs)

759,397 retweets (about 80% of the total number of tweets analyzed)

111,800 original tweets (about 12% of the total number of tweets analyzed)

2. Admittedly, this is not a flawless distinction: verification was originally reserved for ‘elite’ users, but Twitter has since made the application available to anyone willing to go through the lengthy verification process. Nonetheless, Twitter maintains that verified accounts are accounts of “public interest”, generally “maintained by users in music, acting, fashion, government, politics, religion, journalism, media, sports, business, and other key interest areas”.

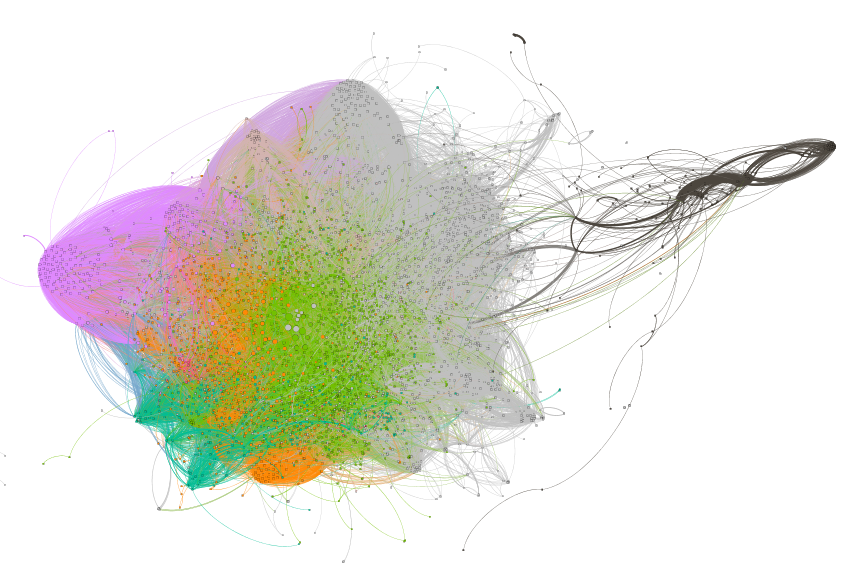

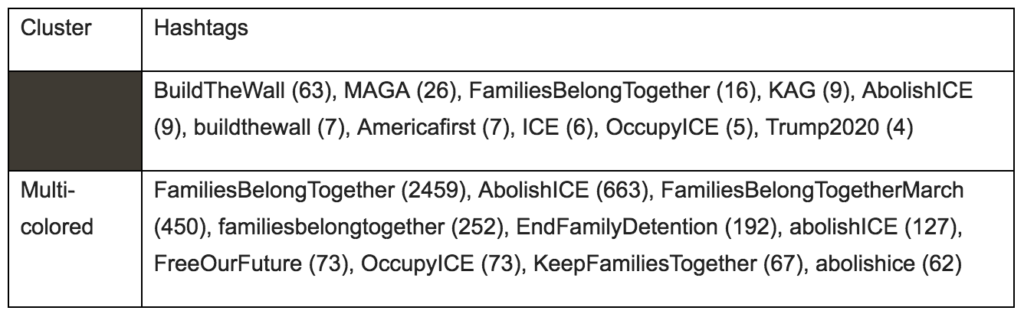

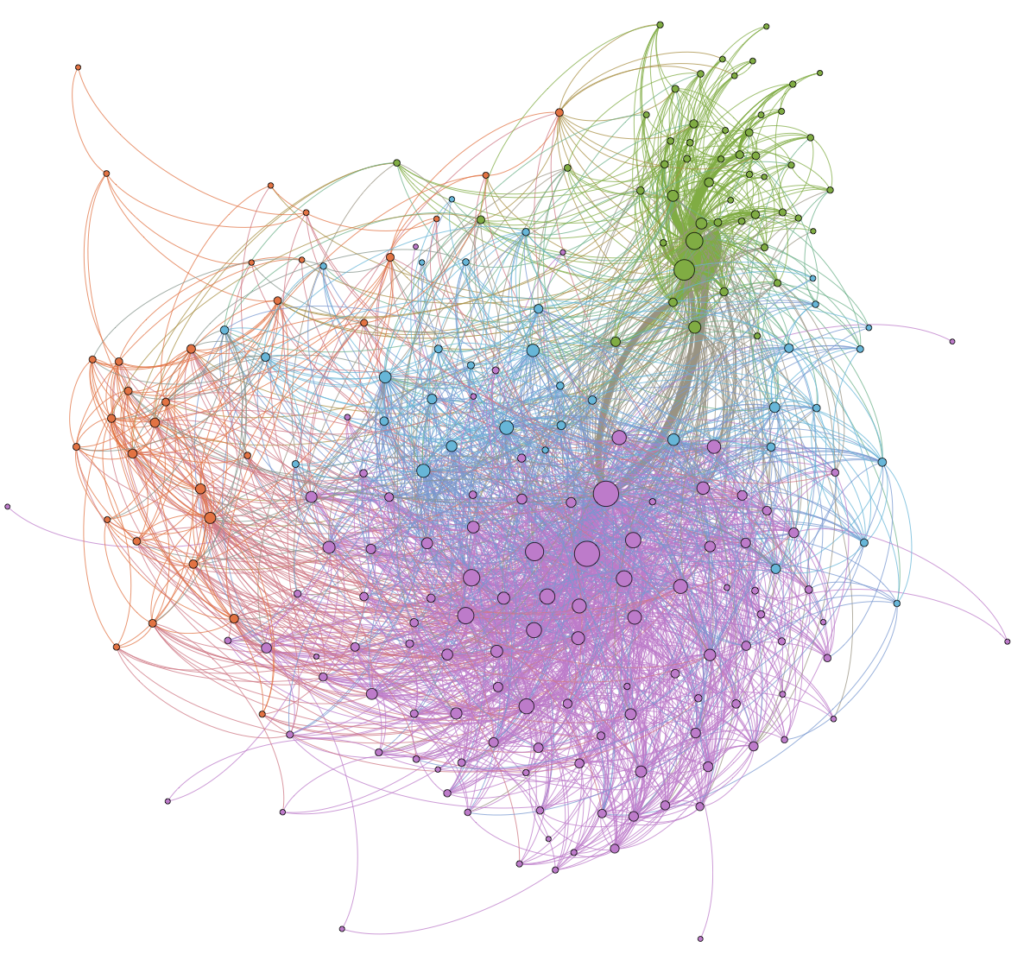

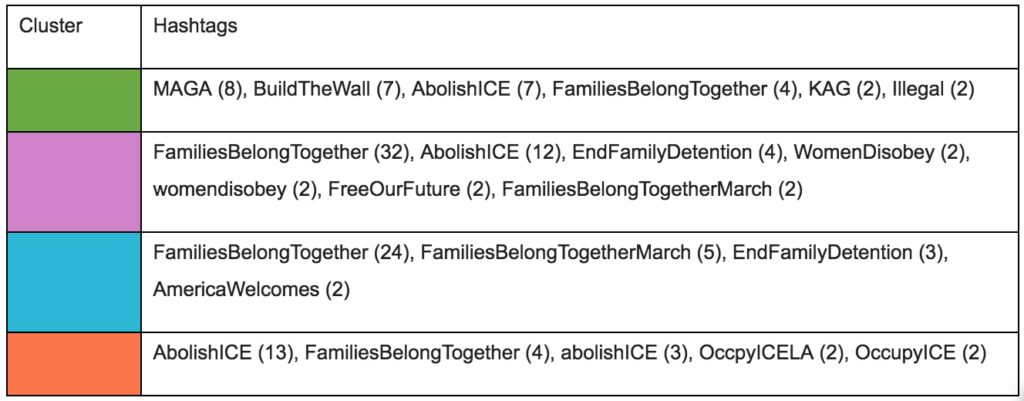

Visualizations helped make sense of the network structure of the collected Twitter data. Figures 1 and 2 represent networks of shared content in which images (nodes) are connected if a user shared both of them. Figure 1 shows shared retweets of images (two images are connected if the same user retweeted both), and Figure 2 shows shared quotes of images (two images are connected if the same user quoted both). Notably, Figure 1 contains two distinct clusters in which images were generally siloed, whereas quoted images shared users across different types of clusters. The homophily (clustering based on shared beliefs) among clusters is evident in the corresponding tables of the hashtags most common within each cluster.

Figure 1. Network of visual content with shared retweeting users from immigration rights protests

Table 1. Most used hashtags in clusters of retweeted visual content

Figure 2. Network of visual content with shared quoting users from immigration rights protests

Table 2. Most used hashtags in clusters of quoted visual content
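The network construction behind Figures 1 and 2 can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the study's code: the input is assumed to be a list of hypothetical `(user_id, image_id)` pairs (one per retweet or quote), and connected components are used here as a simple stand-in for whatever clustering method the study applied.

```python
from collections import defaultdict
from itertools import combinations


def co_share_edges(shares):
    """Link two images whenever the same user shared both.

    shares: iterable of (user_id, image_id) pairs.
    Returns {(image_a, image_b): number of users who shared both}.
    """
    images_by_user = defaultdict(set)
    for user, image in shares:
        images_by_user[user].add(image)

    weights = defaultdict(int)
    for images in images_by_user.values():
        # Every pair of images shared by this user gains one unit of weight.
        for a, b in combinations(sorted(images), 2):
            weights[(a, b)] += 1
    return dict(weights)


def components(edges):
    """Group images into connected components (a rough proxy for clusters)."""
    neighbours = defaultdict(set)
    for a, b in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)

    seen, groups = set(), []
    for start in sorted(neighbours):
        if start in seen:
            continue
        stack, group = [start], set()
        while stack:
            node = stack.pop()
            if node in group:
                continue
            group.add(node)
            stack.extend(neighbours[node] - group)
        seen |= group
        groups.append(group)
    return groups


# Hypothetical shares: u1 and u2 share the same two images, u3 and u4 another set.
shares = [
    ("u1", "imgA"), ("u1", "imgB"),
    ("u2", "imgA"), ("u2", "imgB"),
    ("u3", "imgC"), ("u3", "imgD"),
    ("u4", "imgD"),
]
edges = co_share_edges(shares)
groups = components(edges)
```

Running the same construction once on retweets and once on quotes would give the two networks compared above; counting hashtags within each group would correspond to Tables 1 and 2.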

of users were verified, but more than twice as likely to share visual content

visual content drives engagement

The analysis demonstrates that not all media are interacted with equally. Photos were over 13 times more likely than videos to be shared in the dataset, but engagement with video, specifically retweets, was significantly higher. The majority of media were photos, ranging from 72% to 81% of the media in each group.

quoting changes meanings

Narratives generated by other users through popular quoting of the original images did not always align with the intent of the original content and often ran contrary to the initial framing; we identified these as ‘counter-narratives’. For example, a tweeted photo that was originally intended to communicate solidarity with the protest marches was quoted to mock the pictured protesters and warn of impending ‘communism.’ The same image with the new quote context was then retweeted more than the original, eventually becoming more prominent online than the original narrative ascribed to the image. In total, there were five individual instances where a counter-narrative rose to prominence over the original tweet while co-opting the visual content (see full report for more details).
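The "rose to prominence" condition described above, a quote being retweeted more than the tweet it quotes, can be checked mechanically. The sketch below is an assumption-laden illustration: the `Tweet` record and its fields are hypothetical, and it only surfaces candidates by volume. Deciding whether a flagged quote actually reframes the image still requires reading the content, as the study did.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Tweet:
    tweet_id: str
    retweets: int
    quoted_id: Optional[str] = None  # id of the quoted tweet, if this is a quote


def overtaking_quotes(tweets):
    """Return (quote_id, original_id) pairs where the quote out-retweets its original."""
    by_id = {t.tweet_id: t for t in tweets}
    flagged = []
    for t in tweets:
        original = by_id.get(t.quoted_id) if t.quoted_id else None
        if original is not None and t.retweets > original.retweets:
            flagged.append((t.tweet_id, original.tweet_id))
    return flagged


# Hypothetical counts, for illustration only.
tweets = [
    Tweet("orig", retweets=120),
    Tweet("q1", retweets=450, quoted_id="orig"),
    Tweet("q2", retweets=30, quoted_id="orig"),
]
candidates = overtaking_quotes(tweets)
```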

narratives from anti-immigrant sources are hardly contested

Popular visual content from the cluster of anti-immigrant sources came from the account AlwaysActions, which consists of mugshots of ‘illegal aliens’, as they are referred to in the tweets, who had allegedly committed horrendous crimes. Accordingly, common narratives that surfaced through content analysis, both of the original text and subsequent quotes, revolved around safety, truth, and the fear of open borders. The original frames of these images remained generally uncontested in subsequent quotes of the image, and 95% of high-volume subsequent quotes were in solidarity with the original narrative.

visual content gets co-opted by opposing groups

Other popular tweets of visual content originated from left-leaning activists and were co-opted by identified anti-immigrant communities, to the extent that they were grouped into the same community (Figure 2 – the green cluster) during the network analysis. For example, an image of a mother and her children with protest signs, originally shared from her account, was re-contextualized by anti-immigrant users to spread defamatory comments about the woman’s appearance and her choice to bring her children to a rally.

of counter-narratives of visual content were created by unverified sources than verified

historical anti-immigrant propaganda tactics

Tactics reminiscent of historical practices of anti-immigrant propaganda were observed in proliferating counter-narratives, including stereotypes, harassment, and calls for violence.

misinformation builds a following

All instances of prominent counter-narratives were propagated by accounts with significantly larger followings and reached a larger audience than the original image.

image manipulation attempts to discredit immigrant rights efforts

from digital hate speech to physical violence

Evidence that false narratives, malicious tactics, and mobilization on social media carry over into physical spaces and incite physical violence is the highest concern for activists.

self-censorship effect

While organizations reiterated that they felt comfortable with their ad hoc strategies for managing attacks, individuals from their communities are more frequently targeted after engaging with pro-immigrant content, which hampers overall participation and self-advocacy.

intimidation of marginalized voices perpetuates their digital exclusion

Although platform responses have begun to take shape, immigration activists believe these solutions don’t take into account those who are most affected by malicious online practices. Thus, allies, who are less subject to harassment and intimidation than immigrants, are more likely to advocate for immigration rights online. If those most affected are reluctant to participate in digital spaces, then marginalized voices are still left out of platform and policy solutions.

In various forms, every finding in this study underlines the power of prominent figures in shaping how we perceive the images presented to us. Given the rapid exposure and quick emotional response to visual content, it’s clear that images particularly lend themselves to recontextualization and amplification in counter-narratives by key influencers online, whether or not the account is verified or the new narrative matches the image. This makes images especially predisposed to contextual misinformation. The following recommendations are for practitioners and activists who use social media for human rights initiatives, based on the evidence uncovered in this report.

There’s not enough evidence in this study to conclusively recommend one medium over the other. That said, videos receive significantly higher engagement and, of all the images that facilitated the rise of counter-narratives in this dataset, only one was a video. Verified sources were also twice as likely as unverified sources to share videos, suggesting video is a preferred medium for more prominent sources.

All participants had experience with small-scale attacks, but no strategies were premeditated for combating larger attacks. It’s important to know answers to questions like: When do you engage with the attacker? When should you join a conversation to support a community member? When do you report an account? Organizations addressed small-scale attacks ad hoc, but given the quick spread of counter-narratives found in this study, a predetermined plan could temper an attack that could escalate in just a few hours.

Harmful attacks will come from accounts with large followings and have enough clout to convince people that their narrative about media is more correct than the original information. Solidarity with partner organizations and other users is the best tactic against misinformation.

Most accounts are unverified, so making generalizable claims about the behaviors of unverified and verified users is difficult. That said, unverified sources in this study were more likely to create counter-narratives around visual content and content generated by community members. If there is a particularly suspect account that follows or has friended your organization’s social media, be sure to monitor their activity as a preemptive layer of protection before an attack.

Since attacks often targeted users with small followings, most victims were single users and not large organizations. For immigrant rights organizations with a larger audience, if there is an individual whose media has been targeted or mis-contextualized online, simply correcting the context and misinformation on an organizational account can go a long way in combating misinformation.

If the target audience is not directly engaging, but instead allies that feel safer advocating online, then supporting and standing by individuals mentioned in #3 could lead to more community engagement. It’s important to have allies, but creating digital spaces where people feel safe to advocate for themselves leads to more diverse conversations and solutions.

Given the rampant nature of sexism, racism, and calls for violence online, harassment around photos and videos is not exempt from this trend. Warn community members about the risk of harassment that may stem from sharing photos and videos about contentious topics like immigration rights, and disseminate tips on how to address it. Several participants noted that attacks tend to develop around specific events, like marches or protests, and around social justice issues circulating in the news at the time.

Solutions aren’t comprehensive until they represent those most affected, and take into account their needs. Community members and the organizations that represent them need to advocate for their digital well-being so the stories of marginalized voices can rise to prominence.